Mirror of https://github.com/kevinveenbirkenbach/docker-volume-backup.git

Synced 2026-02-02 11:04:06 +00:00

Compare commits: v1.5.0...feature-my (1 commit)

| Author | SHA1 | Date |
|---|---|---|
| | 932595128c | |

7

.github/FUNDING.yml

vendored

@@ -1,7 +0,0 @@

github: kevinveenbirkenbach

patreon: kevinveenbirkenbach

buy_me_a_coffee: kevinveenbirkenbach

custom: https://s.veen.world/paypaldonate

91

.github/workflows/ci.yml

vendored

@@ -1,91 +0,0 @@

name: CI (make tests, stable, publish)

on:
  push:
    branches: ["**"]
    tags: ["v*.*.*"] # SemVer tags like v1.2.3
  pull_request:

permissions:
  contents: write # push/update 'stable' tag
  packages: write # push to GHCR

env:
  IMAGE_NAME: baudolo
  REGISTRY: ghcr.io
  IMAGE_REPO: ${{ github.repository }}

jobs:
  test:
    name: make test
    runs-on: ubuntu-latest

    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Show docker info
        run: |
          docker version
          docker info

      - name: Run all tests via Makefile
        run: |
          make test

      - name: Upload E2E artifacts (always)
        if: always()
        uses: actions/upload-artifact@v4
        with:
          name: e2e-artifacts
          path: artifacts
          if-no-files-found: ignore

  stable_and_publish:
    name: Mark stable + publish image (SemVer tags only)
    needs: [test]
    runs-on: ubuntu-latest
    if: startsWith(github.ref, 'refs/tags/v')

    steps:
      - name: Checkout (full history for tags)
        uses: actions/checkout@v4
        with:
          fetch-depth: 0

      - name: Derive version from tag
        id: ver
        run: |
          TAG="${GITHUB_REF#refs/tags/}" # v1.2.3
          echo "tag=${TAG}" >> "$GITHUB_OUTPUT"

      - name: Mark 'stable' git tag (force update)
        run: |
          git config user.name "github-actions[bot]"
          git config user.email "github-actions[bot]@users.noreply.github.com"
          git tag -f stable "${GITHUB_SHA}"
          git push -f origin stable

      - name: Login to GHCR
        uses: docker/login-action@v3
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Build image (Makefile)
        run: |
          make build

      - name: Tag image for registry
        run: |
          # local image built by Makefile is: baudolo:local
          docker tag "${IMAGE_NAME}:local" "${REGISTRY}/${IMAGE_REPO}:${{ steps.ver.outputs.tag }}"
          docker tag "${IMAGE_NAME}:local" "${REGISTRY}/${IMAGE_REPO}:stable"
          docker tag "${IMAGE_NAME}:local" "${REGISTRY}/${IMAGE_REPO}:sha-${GITHUB_SHA::12}"

      - name: Push image
        run: |
          docker push "${REGISTRY}/${IMAGE_REPO}:${{ steps.ver.outputs.tag }}"
          docker push "${REGISTRY}/${IMAGE_REPO}:stable"
          docker push "${REGISTRY}/${IMAGE_REPO}:sha-${GITHUB_SHA::12}"
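The tagging steps of the `stable_and_publish` job can be read as a small pure function. The Python sketch below is illustrative only and is not part of the repository; the name `image_tags` is hypothetical. It mirrors the shell expansions `${GITHUB_REF#refs/tags/}` and `${GITHUB_SHA::12}` from the workflow, with one deliberate difference: it rejects refs that are not tags, whereas the shell expansion would leave such a ref unchanged.

```python
def image_tags(registry: str, repo: str, github_ref: str, github_sha: str) -> list[str]:
    """Return the three image references pushed for a SemVer tag build."""
    prefix = "refs/tags/"
    if not github_ref.startswith(prefix):
        # Assumption of this sketch: fail loudly instead of passing the ref through.
        raise ValueError(f"not a tag ref: {github_ref!r}")
    tag = github_ref[len(prefix):]  # equivalent to ${GITHUB_REF#refs/tags/}
    short_sha = github_sha[:12]     # equivalent to ${GITHUB_SHA::12}
    base = f"{registry}/{repo}"
    return [f"{base}:{tag}", f"{base}:stable", f"{base}:sha-{short_sha}"]
```

Every tagged release therefore ends up under three names: the exact version, the moving `stable` tag, and a commit-addressed `sha-` tag.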

5

.gitignore

vendored

@@ -1,5 +0,0 @@

__pycache__
artifacts/
*.egg-info
dist/
build/

2

.travis.yml

Normal file

@@ -0,0 +1,2 @@

language: shell
script: shellcheck $(find . -type f -name '*.sh')

42

CHANGELOG.md

@@ -1,42 +0,0 @@

## [1.5.0] - 2026-01-31

* Make `databases.csv` optional: missing or empty files now emit warnings and no longer break backups
* Fix Docker CLI compatibility by switching to `docker-ce-cli` and required build tools

## [1.4.0] - 2026-01-31

* Baudolo now restarts Docker Compose stacks in a wrapper-aware way (with a `docker compose` fallback), ensuring that all Compose overrides and env files are applied identically to the Infinito.Nexus workflow.

## [1.3.0] - 2026-01-10

* Empty `databases.csv` no longer causes `baudolo-seed` to fail

## [1.2.0] - 2025-12-29

* Introduced **`--dump-only-sql`** mode for reliable, SQL-only database backups (replaces `--dump-only`).
* Database configuration in `databases.csv` is now **strict and explicit** (`*` or concrete database name only).
* **PostgreSQL cluster backups** are supported via `*`.
* SQL dumps are written **atomically** to avoid corrupted or empty files.
* Backups are **smarter and faster**: ignored volumes are skipped early, file backups run only when needed.
* Improved reliability through expanded end-to-end tests and safer defaults.
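The 1.2.0 entry mentions atomically written SQL dumps without showing how that is done. A common way to get this property is to write to a temporary file in the target directory and rename it into place. The Python sketch below illustrates that general technique only; `write_atomically` is a hypothetical name, and nothing here is confirmed to match baudolo's actual implementation.

```python
import os
import tempfile
from pathlib import Path


def write_atomically(target: Path, data: bytes) -> None:
    """Write data so readers see either the old file or the complete new one."""
    target.parent.mkdir(parents=True, exist_ok=True)
    # The temp file lives next to the target so the final rename stays on one filesystem.
    fd, tmp_name = tempfile.mkstemp(dir=target.parent, prefix=target.name + ".", suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as handle:
            handle.write(data)
            handle.flush()
            os.fsync(handle.fileno())
        os.replace(tmp_name, target)  # atomic rename within one filesystem
    except BaseException:
        # Remove the partial temp file so no half-written dump is left behind.
        try:
            os.unlink(tmp_name)
        except FileNotFoundError:
            pass
        raise
```

If the dump process fails midway, the destination path is never touched, so an interrupted run cannot leave an empty or truncated `.sql` file.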

## [1.1.1] - 2025-12-28

* **Backup:** In `--dump-only-sql` mode, fall back to file backups with a warning when no database dump can be produced (e.g. missing `databases.csv` entry).

## [1.1.0] - 2025-12-28

* **Backup:** Log a warning and skip database dumps when no `databases.csv` entry is present instead of raising an exception; introduce module-level logging and apply formatting cleanups across backup/restore code and tests.
* **CLI:** Switch to an FHS-compliant default backup directory (`/var/lib/backup`) and use a stable default repository name instead of dynamic detection.
* **Maintenance:** Update mirror configuration and ignore generated `.egg-info` files.

## [1.0.0] - 2025-12-27

* Official Release 🥳

37

Dockerfile

@@ -1,37 +0,0 @@

# syntax=docker/dockerfile:1
FROM python:3.11-slim

WORKDIR /app

# Base deps for build/runtime + docker repo key
RUN apt-get update && apt-get install -y --no-install-recommends \
      make \
      rsync \
      ca-certificates \
      bash \
      curl \
      gnupg \
    && rm -rf /var/lib/apt/lists/*

# Install Docker CLI (docker-ce-cli) from Docker's official apt repo
RUN bash -lc "set -euo pipefail \
    && install -m 0755 -d /etc/apt/keyrings \
    && curl -fsSL https://download.docker.com/linux/debian/gpg \
       | gpg --dearmor -o /etc/apt/keyrings/docker.gpg \
    && chmod a+r /etc/apt/keyrings/docker.gpg \
    && . /etc/os-release \
    && echo \"deb [arch=\$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/debian \${VERSION_CODENAME} stable\" \
       > /etc/apt/sources.list.d/docker.list \
    && apt-get update \
    && apt-get install -y --no-install-recommends docker-ce-cli \
    && rm -rf /var/lib/apt/lists/*"

# Fail fast if docker client is missing
RUN docker version || true
RUN command -v docker

COPY . .
RUN make install

ENV PYTHONUNBUFFERED=1
CMD ["baudolo", "--help"]

4

MIRRORS

@@ -1,4 +0,0 @@

git@github.com:kevinveenbirkenbach/backup-docker-to-local.git
ssh://git@git.veen.world:2201/kevinveenbirkenbach/backup-docker-to-local.git
ssh://git@code.infinito.nexus:2201/kevinveenbirkenbach/backup-docker-to-local.git
https://pypi.org/project/backup-docker-to-local/

57

Makefile

@@ -1,57 +0,0 @@

.PHONY: install build \
	test-e2e test test-unit test-integration

# Default python if no venv is active
PY_DEFAULT ?= python3

IMAGE_NAME ?= baudolo
IMAGE_TAG ?= local
IMAGE := $(IMAGE_NAME):$(IMAGE_TAG)

install:
	@set -eu; \
	PY="$(PY_DEFAULT)"; \
	if [ -n "$${VIRTUAL_ENV:-}" ] && [ -x "$${VIRTUAL_ENV}/bin/python" ]; then \
		PY="$${VIRTUAL_ENV}/bin/python"; \
	fi; \
	echo ">>> Using python: $$PY"; \
	"$$PY" -m pip install --upgrade pip; \
	"$$PY" -m pip install -e .; \
	command -v baudolo >/dev/null 2>&1 || { \
		echo "ERROR: baudolo not found on PATH after install"; \
		exit 2; \
	}; \
	baudolo --help >/dev/null 2>&1 || true

# ------------------------------------------------------------
# Build the baudolo Docker image
# ------------------------------------------------------------
build:
	@echo ">> Building Docker image $(IMAGE)"
	docker build -t $(IMAGE) .

clean:
	git clean -fdX .

# ------------------------------------------------------------
# Run E2E tests inside the container (Docker socket required)
# ------------------------------------------------------------
# E2E via isolated Docker-in-Docker (DinD)
# - depends on local image build
# - starts a DinD daemon container on a dedicated network
# - loads the freshly built image into DinD
# - runs the unittest suite inside a container that talks to DinD via DOCKER_HOST
test-e2e: clean build
	@bash scripts/test-e2e.sh

test: test-unit test-integration test-e2e

test-unit: clean build
	@echo ">> Running unit tests"
	@docker run --rm -t $(IMAGE) \
		bash -lc 'python -m unittest discover -t . -s tests/unit -p "test_*.py" -v'

test-integration: clean build
	@echo ">> Running integration tests"
	@docker run --rm -t $(IMAGE) \
		bash -lc 'python -m unittest discover -t . -s tests/integration -p "test_*.py" -v'

225

README.md

@@ -1,217 +1,58 @@

|

||||

# baudolo – Deterministic Backup & Restore for Docker Volumes 📦🔄

|

||||

[](https://github.com/sponsors/kevinveenbirkenbach) [](https://www.patreon.com/c/kevinveenbirkenbach) [](https://buymeacoffee.com/kevinveenbirkenbach) [](https://s.veen.world/paypaldonate) [](https://www.gnu.org/licenses/agpl-3.0) [](https://www.docker.com) [](https://www.python.org) [](https://github.com/kevinveenbirkenbach/backup-docker-to-local/stargazers)

|

||||

# docker-volume-backup

|

||||

[](./LICENSE.txt) [](https://travis-ci.org/kevinveenbirkenbach/docker-volume-backup)

|

||||

|

||||

## goal

|

||||

This script backs up all Docker volumes with the help of rsync.

|

||||

|

||||

`baudolo` is a backup and restore system for Docker volumes with

|

||||

**mandatory file backups** and **explicit, deterministic database dumps**.

|

||||

It is designed for environments with many Docker services where:

|

||||

- file-level backups must always exist

|

||||

- database dumps must be intentional, predictable, and auditable

|

||||

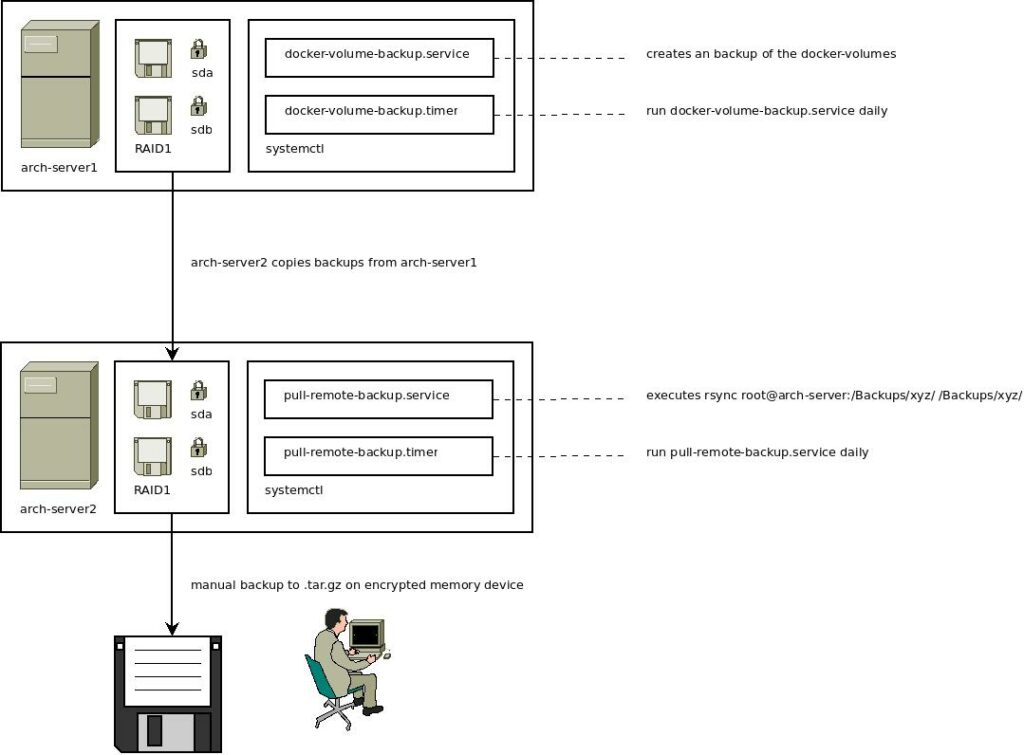

## scheme

|

||||

It is part of the following scheme:

|

||||

|

||||

You will find further information [in this blog post](https://www.veen.world/2020/12/26/how-i-backup-dedicated-root-servers/).

|

||||

|

||||

## ✨ Key Features

|

||||

|

||||

- 📦 Incremental Docker volume backups using `rsync --link-dest`

|

||||

- 🗄 Optional SQL dumps for:

|

||||

- PostgreSQL

|

||||

- MariaDB / MySQL

|

||||

- 🌱 Explicit database definition for SQL backups (no auto-discovery)

|

||||

- 🧾 Backup integrity stamping via `dirval` (Python API)

|

||||

- ⏸ Automatic container stop/start when required for consistency

|

||||

- 🚫 Whitelisting of containers that do not require stopping

|

||||

- ♻️ Modular, maintainable Python architecture

|

||||

|

||||

|

||||

## 🧠 Core Concept (Important!)

|

||||

|

||||

`baudolo` **separates file backups from database dumps**.

|

||||

|

||||

- **Docker volumes are always backed up at file level**

|

||||

- **SQL dumps are created only for explicitly defined databases**

|

||||

|

||||

This results in the following behavior:

|

||||

|

||||

| Database defined | File backup | SQL dump |

|

||||

|------------------|-------------|----------|

|

||||

| No | ✔ yes | ✘ no |

|

||||

| Yes | ✔ yes | ✔ yes |

|

||||

|

||||

## 📁 Backup Layout

|

||||

|

||||

Backups are stored in a deterministic, fully nested structure:

|

||||

|

||||

```text

|

||||

<backups-dir>/

|

||||

└── <machine-hash>/

|

||||

└── <repo-name>/

|

||||

└── <timestamp>/

|

||||

└── <volume-name>/

|

||||

├── files/

|

||||

└── sql/

|

||||

└── <database>.backup.sql

|

||||

```

|

||||

|

||||

### Meaning of each level

|

||||

|

||||

* `<machine-hash>`

|

||||

SHA256 hash of `/etc/machine-id` (host separation)

|

||||

|

||||

* `<repo-name>`

|

||||

Logical backup namespace (project / stack)

|

||||

|

||||

* `<timestamp>`

|

||||

Backup generation (`YYYYMMDDHHMMSS`)

|

||||

|

||||

* `<volume-name>`

|

||||

Docker volume name

|

||||

|

||||

* `files/`

|

||||

Incremental file backup (rsync)

|

||||

|

||||

* `sql/`

|

||||

Optional SQL dumps (only for defined databases)
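The layout described above can be sketched as a pair of helper functions. This is a minimal illustration and not baudolo's actual code: the names `machine_hash` and `volume_backup_dir` are hypothetical, and hashing the raw bytes of `/etc/machine-id` (as `sha256sum` would) is an assumption about how the hash is computed.

```python
import hashlib
from datetime import datetime
from pathlib import Path


def machine_hash(machine_id_file: Path = Path("/etc/machine-id")) -> str:
    """SHA256 hex digest of the machine-id file's raw bytes (assumed hashing scheme)."""
    return hashlib.sha256(machine_id_file.read_bytes()).hexdigest()


def volume_backup_dir(backups_dir: str, machine: str, repo_name: str,
                      volume: str, when: datetime) -> Path:
    """Build <backups-dir>/<machine-hash>/<repo-name>/<timestamp>/<volume-name>."""
    timestamp = when.strftime("%Y%m%d%H%M%S")  # the YYYYMMDDHHMMSS generation name
    return Path(backups_dir) / machine / repo_name / timestamp / volume


# The two documented subdirectories hang off the volume directory:
#   volume_backup_dir(...) / "files"
#   volume_backup_dir(...) / "sql" / "<database>.backup.sql"
```

Because every path component is derived from stable inputs (host identity, namespace, generation time, volume name), two runs with the same inputs address the same directory, which is what makes the layout deterministic.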

|

||||

|

||||

## 🚀 Installation

|

||||

|

||||

### Local (editable install)

|

||||

## Backup

|

||||

Execute:

|

||||

|

||||

```bash

|

||||

python3 -m venv .venv

|

||||

source .venv/bin/activate

|

||||

pip install -e .

|

||||

./docker-volume-backup.sh

|

||||

```

|

||||

|

||||

## 🌱 Database Definition (SQL Backup Scope)

|

||||

|

||||

### How SQL backups are defined

|

||||

|

||||

`baudolo` creates SQL dumps **only** for databases that are **explicitly defined**

|

||||

via configuration (e.g. a databases definition file or seeding step).

|

||||

|

||||

If a database is **not defined**:

|

||||

|

||||

* its Docker volume is still backed up (files)

|

||||

* **no SQL dump is created**

|

||||

|

||||

> No database definition → file backup only

|

||||

> Database definition present → file backup + SQL dump

|

||||

|

||||

### Why explicit definition?

|

||||

|

||||

`baudolo` does **not** inspect running containers to guess databases.

|

||||

|

||||

Databases must be explicitly defined to guarantee:

|

||||

|

||||

* deterministic backups

|

||||

* predictable restore behavior

|

||||

* reproducible environments

|

||||

* zero accidental production data exposure

|

||||

|

||||

### Required database metadata

|

||||

|

||||

Each database definition provides:

|

||||

|

||||

* database instance (container or logical instance)

|

||||

* database name

|

||||

* database user

|

||||

* database password

|

||||

|

||||

This information is used by `baudolo` to execute

|

||||

`pg_dump`, `pg_dumpall`, or `mariadb-dump`.
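How the metadata might map onto those three tools can be sketched like this. The function `dump_command`, the `db_type` values, and the use of `docker exec` are assumptions made for illustration; the use of `*` for a whole PostgreSQL cluster follows the changelog. Password handling is deliberately left out of the sketch.

```python
def dump_command(db_type: str, container: str, database: str, user: str) -> list[str]:
    """Build an argv list for dumping one database (or a whole PostgreSQL cluster)."""
    if db_type == "postgres":
        if database == "*":
            # "*" selects a cluster-wide dump of all databases.
            return ["docker", "exec", container, "pg_dumpall", "-U", user]
        return ["docker", "exec", container, "pg_dump", "-U", user, "-d", database]
    if db_type == "mariadb":
        return ["docker", "exec", container, "mariadb-dump", "-u", user, database]
    raise ValueError(f"unsupported database type: {db_type!r}")
```

Returning an argv list rather than a shell string keeps database and user names from being interpreted by a shell.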

|

||||

|

||||

## 💾 Running a Backup

|

||||

## Recover

|

||||

Execute:

|

||||

|

||||

```bash

|

||||

baudolo \

|

||||

--compose-dir /srv/docker \

|

||||

--databases-csv /etc/baudolo/databases.csv \

|

||||

--database-containers central-postgres central-mariadb \

|

||||

--images-no-stop-required alpine postgres mariadb mysql \

|

||||

--images-no-backup-required redis busybox

|

||||

./docker-volume-recover.sh {{volume_name}} {{backup_path}}

|

||||

```

|

||||

|

||||

### Common Backup Flags

|

||||

|

||||

| Flag | Description |

|

||||

| --------------- | ------------------------------------------- |

|

||||

| `--everything` | Always stop containers and re-run rsync |

|

||||

| `--dump-only-sql`| Skip file backups only for DB volumes when dumps succeed; non-DB volumes are still backed up; fallback to files if no dump. |

|

||||

| `--shutdown` | Do not restart containers after backup |

|

||||

| `--backups-dir` | Backup root directory (default: `/Backups`) |

|

||||

| `--repo-name` | Backup namespace under machine hash |
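The `--dump-only-sql` row packs three cases into one sentence. Spelled out as a decision function (a hypothetical helper written for this explanation, not baudolo's code), the behavior described in the table is:

```python
def needs_file_backup(dump_only_sql: bool, is_db_volume: bool, dump_succeeded: bool) -> bool:
    """Decide whether a volume still gets a file-level backup."""
    if not dump_only_sql:
        return True  # default mode: files are always backed up
    # With --dump-only-sql, files are skipped only for a database volume
    # whose SQL dump was actually produced.
    return not (is_db_volume and dump_succeeded)
```

The flag never leaves a volume without any backup: a non-database volume, or a database volume whose dump could not be produced, still falls back to the file backup.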

|

||||

|

||||

## ♻️ Restore Operations

|

||||

|

||||

### Restore Volume Files

|

||||

## Debug

|

||||

To check what is going on in the mount container, run the following command:

|

||||

|

||||

```bash

|

||||

baudolo-restore files \

|

||||

my-volume \

|

||||

<machine-hash> \

|

||||

<version> \

|

||||

--backups-dir /Backups \

|

||||

--repo-name my-repo

|

||||

docker run -it --entrypoint /bin/sh --rm --volumes-from {{container_name}} -v /Backups/:/Backups/ kevinveenbirkenbach/alpine-rsync

|

||||

```

|

||||

## Manual Backup

|

||||

rsync -aPvv '{{source_path}}/' '{{destination_path}}'

|

||||

|

||||

Restore into a **different target volume**:

|

||||

## Test

|

||||

Delete the volume.

|

||||

|

||||

```bash

|

||||

baudolo-restore files \

|

||||

target-volume \

|

||||

<machine-hash> \

|

||||

<version> \

|

||||

--source-volume source-volume

|

||||

docker rm -f container-name

|

||||

docker volume rm volume-name

|

||||

```

|

||||

|

||||

### Restore PostgreSQL

|

||||

Recover the volume:

|

||||

|

||||

```bash

|

||||

baudolo-restore postgres \

|

||||

my-volume \

|

||||

<machine-hash> \

|

||||

<version> \

|

||||

--container postgres \

|

||||

--db-name appdb \

|

||||

--db-password secret \

|

||||

--empty

|

||||

docker volume create volume-name

|

||||

docker run --rm -v volume-name:/recover/ -v ~/backup/:/backup/ "kevinveenbirkenbach/alpine-rsync" sh -c "rsync -avv /backup/ /recover/"

|

||||

```

|

||||

|

||||

### Restore MariaDB / MySQL

|

||||

Restart the container.

|

||||

|

||||

```bash

|

||||

baudolo-restore mariadb \

|

||||

my-volume \

|

||||

<machine-hash> \

|

||||

<version> \

|

||||

--container mariadb \

|

||||

--db-name shopdb \

|

||||

--db-password secret \

|

||||

--empty

|

||||

```

|

||||

## Optimization

|

||||

This setup script is not yet optimized for performance. Please optimize it before using it in a professional environment.

|

||||

|

||||

> `baudolo` automatically detects whether `mariadb` or `mysql`

|

||||

> is available inside the container
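One plausible way to perform such a detection is to probe the container for each client binary in turn. The sketch below is an assumption about the approach, not a copy of baudolo's logic; `default_probe` and `detect_client` are hypothetical names, and the probe is injectable so the selection logic can be exercised without Docker.

```python
import subprocess
from typing import Callable, Optional, Sequence


def default_probe(container: str, binary: str) -> bool:
    """Return True if `binary` is on PATH inside the running container."""
    result = subprocess.run(
        ["docker", "exec", container, "sh", "-c", f"command -v {binary}"],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        check=False,
    )
    return result.returncode == 0


def detect_client(
    container: str,
    candidates: Sequence[str] = ("mariadb", "mysql"),
    probe: Callable[[str, str], bool] = default_probe,
) -> Optional[str]:
    """Pick the first available client binary, preferring `mariadb` over `mysql`."""
    for binary in candidates:
        if probe(container, binary):
            return binary
    return None
```

Trying `mariadb` first and falling back to `mysql` lets the same restore command work against both newer MariaDB images and MySQL images.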

|

||||

|

||||

## 🔍 Backup Scheme

|

||||

|

||||

The backup mechanism uses incremental backups with rsync and stamps directories with a unique hash. For more details on the backup scheme, check out [this blog post](https://blog.veen.world/blog/2020/12/26/how-i-backup-dedicated-root-servers/).
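The incremental part relies on rsync's `--link-dest` option, which hard-links files that are unchanged relative to a previous backup instead of copying them again. A minimal sketch of assembling such a call follows; `rsync_command` is a hypothetical helper, and the exact flag set baudolo passes to rsync is not shown in this document, so `-a --delete` is an assumption.

```python
from pathlib import Path
from typing import Optional


def rsync_command(source: Path, destination: Path, previous: Optional[Path]) -> list[str]:
    """Build an rsync argv that hard-links unchanged files against `previous`."""
    cmd = ["rsync", "-a", "--delete"]
    if previous is not None:
        # Use an absolute path: a relative --link-dest is resolved against the destination.
        cmd.append(f"--link-dest={previous}")
    # The trailing slash copies the directory's contents rather than the directory itself.
    cmd += [f"{source}/", str(destination)]
    return cmd
```

With this scheme every `<timestamp>` directory looks like a full copy, while unchanged files consume disk space only once.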

|

||||

|

||||

|

||||

## 👨‍💻 Author

|

||||

|

||||

**Kevin Veen-Birkenbach**

|

||||

- 📧 [kevin@veen.world](mailto:kevin@veen.world)

|

||||

- 🌐 [https://www.veen.world/](https://www.veen.world/)

|

||||

|

||||

## 📜 License

|

||||

|

||||

This project is licensed under the **GNU Affero General Public License v3.0**. See the [LICENSE](./LICENSE) file for details.

|

||||

|

||||

## 🔗 More Information

|

||||

|

||||

- [Docker Volumes Documentation](https://docs.docker.com/storage/volumes/)

|

||||

- [Docker Backup Volumes Blog](https://blog.ssdnodes.com/blog/docker-backup-volumes/)

|

||||

- [Backup Strategies](https://en.wikipedia.org/wiki/Incremental_backup#Incremental)

|

||||

|

||||

---

|

||||

|

||||

Happy Backing Up! 🚀🔐

|

||||

## More information

|

||||

- https://blog.ssdnodes.com/blog/docker-backup-volumes/

|

||||

- https://www.baculasystems.com/blog/docker-backup-containers/

|

||||

- https://hub.docker.com/_/mariadb

|

||||

|

||||

46

docker-volume-backup.sh

Normal file

@@ -0,0 +1,46 @@

#!/bin/bash
# Backs up only the volumes of running containers
# If rsync gets stuck, see:
# @see https://stackoverflow.com/questions/20773118/rsync-suddenly-hanging-indefinitely-during-transfers
#
backup_time="$(date '+%Y%m%d%H%M%S')";
backups_folder="/Backups/";
repository_name="$(cd "$(dirname "$(readlink -f "${0}")")" && basename "$(git rev-parse --show-toplevel)")";
machine_id="$(sha256sum /etc/machine-id | head -c 64)";
backup_repository_folder="$backups_folder$machine_id/$repository_name/";
for volume_name in $(docker volume ls --format '{{.Name}}');
do
  echo "start backup routine: $volume_name";
  for container_name in $(docker ps -a --filter volume="$volume_name" --format '{{.Names}}');
  do
    echo "stop container: $container_name" && docker stop "$container_name"
    for source_path in $(docker inspect --format "{{ range .Mounts }}{{ if eq .Type \"volume\"}}{{ if eq .Name \"$volume_name\"}}{{ println .Destination }}{{ end }}{{ end }}{{ end }}" "$container_name");
    do
      destination_path="$backup_repository_folder""latest/$volume_name";
      raw_destination_path="$destination_path/raw"
      prepared_destination_path="$destination_path/prepared"
      log_path="$backup_repository_folder""log.txt";
      backup_dir_path="$backup_repository_folder""diffs/$backup_time/$volume_name";
      raw_backup_dir_path="$backup_dir_path/raw";
      prepared_backup_dir_path="$backup_dir_path/prepared";
      if [ -d "$destination_path" ]
      then
        echo "backup volume: $volume_name";
      else
        echo "first backup volume: $volume_name"
        mkdir -vp "$raw_destination_path";
        mkdir -vp "$raw_backup_dir_path";
        mkdir -vp "$prepared_destination_path";
        mkdir -vp "$prepared_backup_dir_path";
      fi
      docker run --rm --volumes-from "$container_name" -v "$backups_folder:$backups_folder" "kevinveenbirkenbach/alpine-rsync" sh -c "
      rsync -abP --delete --delete-excluded --log-file=$log_path --backup-dir=$raw_backup_dir_path '$source_path/' $raw_destination_path";
    done
    echo "start container: $container_name" && docker start "$container_name";
    # The closing "]" needs a preceding space, otherwise the test fails with a syntax error
    if [ "mariadb" = "$(docker inspect --format='{{.Config.Image}}' "$container_name")" ]
    then
      # NOTE: container name and output path below are still placeholders
      docker exec some-mariadb sh -c 'exec mysqldump --all-databases -uroot -p"$MARIADB_ROOT_PASSWORD"' > /some/path/on/your/host/all-databases.sql
    fi
  done
  echo "end backup routine: $volume_name";
done

6

docker-volume-recover.sh

Normal file

@@ -0,0 +1,6 @@

#!/bin/bash
# @param $1 Volume-Name
# @param $2 Backup path (host directory that is copied into the volume)
volume_name="$1"
backup_path="$2"
docker volume create "$volume_name"
docker run --rm -v "$volume_name:/recover/" -v "$backup_path:/backup/" "kevinveenbirkenbach/alpine-rsync" sh -c "rsync -avv /backup/ /recover/"

@@ -1,29 +0,0 @@

[build-system]
requires = ["setuptools>=69", "wheel"]
build-backend = "setuptools.build_meta"

[project]
name = "backup-docker-to-local"
version = "1.5.0"
description = "Backup Docker volumes to local with rsync and optional DB dumps."
readme = "README.md"
requires-python = ">=3.9"
license = { text = "AGPL-3.0-or-later" }
authors = [{ name = "Kevin Veen-Birkenbach" }]

dependencies = [
  "pandas",
  "dirval",
]

[project.scripts]
baudolo = "baudolo.backup.__main__:main"
baudolo-restore = "baudolo.restore.__main__:main"
baudolo-seed = "baudolo.seed.__main__:main"

[tool.setuptools]
package-dir = { "" = "src" }

[tool.setuptools.packages.find]
where = ["src"]
exclude = ["tests*"]

@@ -1,234 +0,0 @@

|

||||

#!/usr/bin/env bash

|

||||

set -euo pipefail

|

||||

|

||||

# -----------------------------------------------------------------------------

|

||||

# E2E runner using Docker-in-Docker (DinD) with debug-on-failure

|

||||

#

|

||||

# Debug toggles:

|

||||

# E2E_KEEP_ON_FAIL=1 -> keep DinD + volumes + network if tests fail

|

||||

# E2E_KEEP_VOLUMES=1 -> keep volumes even on success/cleanup

|

||||

# E2E_DEBUG_SHELL=1 -> open an interactive shell in the test container instead of running tests

|

||||

# E2E_ARTIFACTS_DIR=./artifacts

|

||||

# -----------------------------------------------------------------------------

|

||||

|

||||

NET="${E2E_NET:-baudolo-e2e-net}"

|

||||

DIND="${E2E_DIND_NAME:-baudolo-e2e-dind}"

|

||||

DIND_VOL="${E2E_DIND_VOL:-baudolo-e2e-dind-data}"

|

||||

E2E_TMP_VOL="${E2E_TMP_VOL:-baudolo-e2e-tmp}"

|

||||

|

||||

DIND_HOST="${E2E_DIND_HOST:-tcp://127.0.0.1:2375}"

|

||||

DIND_HOST_IN_NET="${E2E_DIND_HOST_IN_NET:-tcp://${DIND}:2375}"

|

||||

|

||||

IMG="${E2E_IMAGE:-baudolo:local}"

|

||||

RSYNC_IMG="${E2E_RSYNC_IMAGE:-ghcr.io/kevinveenbirkenbach/alpine-rsync}"

|

||||

|

||||

READY_TIMEOUT_SECONDS="${E2E_READY_TIMEOUT_SECONDS:-120}"

|

||||

ARTIFACTS_DIR="${E2E_ARTIFACTS_DIR:-./artifacts}"

|

||||

|

||||

KEEP_ON_FAIL="${E2E_KEEP_ON_FAIL:-0}"

|

||||

KEEP_VOLUMES="${E2E_KEEP_VOLUMES:-0}"

|

||||

DEBUG_SHELL="${E2E_DEBUG_SHELL:-0}"

|

||||

|

||||

FAILED=0

|

||||

TS="$(date +%Y%m%d%H%M%S)"

|

||||

|

||||

mkdir -p "${ARTIFACTS_DIR}"

|

||||

|

||||

log() { echo ">> $*"; }

|

||||

|

||||

dump_debug() {

|

||||

log "DEBUG: collecting diagnostics into ${ARTIFACTS_DIR}"

|

||||

|

||||

{

|

||||

echo "=== Host docker version ==="

|

||||

docker version || true

|

||||

echo

|

||||

echo "=== Host docker info ==="

|

||||

docker info || true

|

||||

echo

|

||||

echo "=== DinD reachable? (docker -H ${DIND_HOST} version) ==="

|

||||

docker -H "${DIND_HOST}" version || true

|

||||

echo

|

||||

} > "${ARTIFACTS_DIR}/debug-host-${TS}.txt" 2>&1 || true

|

||||

|

||||

# DinD logs

|

||||

docker logs --tail=5000 "${DIND}" > "${ARTIFACTS_DIR}/dind-logs-${TS}.txt" 2>&1 || true

|

||||

|

||||

# DinD state

|

||||

{

|

||||

echo "=== docker -H ps -a ==="

|

||||

docker -H "${DIND_HOST}" ps -a || true

|

||||

echo

|

||||

echo "=== docker -H images ==="

|

||||

docker -H "${DIND_HOST}" images || true

|

||||

echo

|

||||

echo "=== docker -H network ls ==="

|

||||

docker -H "${DIND_HOST}" network ls || true

|

||||

echo

|

||||

echo "=== docker -H volume ls ==="

|

||||

docker -H "${DIND_HOST}" volume ls || true

|

||||

echo

|

||||

echo "=== docker -H system df ==="

|

||||

docker -H "${DIND_HOST}" system df || true

|

||||

} > "${ARTIFACTS_DIR}/debug-dind-${TS}.txt" 2>&1 || true

|

||||

|

||||

# Try to capture recent events (best effort; might be noisy)

|

||||

docker -H "${DIND_HOST}" events --since 10m --until 0s \

|

||||

> "${ARTIFACTS_DIR}/dind-events-${TS}.txt" 2>&1 || true

|

||||

|

||||

# Dump shared /tmp content from the tmp volume:

|

||||

# We create a temporary container that mounts the volume, then tar its content.

|

||||

# (Does not rely on host filesystem paths.)

|

||||

log "DEBUG: archiving shared /tmp (volume ${E2E_TMP_VOL})"

|

||||

docker -H "${DIND_HOST}" run --rm \

|

||||

-v "${E2E_TMP_VOL}:/tmp" \

|

||||

alpine:3.20 \

|

||||

sh -c 'cd /tmp && tar -czf /out.tar.gz . || true' \

|

||||

>/dev/null 2>&1 || true

|

||||

|

||||

# The above writes inside the container FS, not to host. So do it properly:

|

||||

# Use "docker cp" from a temp container.

|

||||

local tmpc="baudolo-e2e-tmpdump-${TS}"

|

||||

docker -H "${DIND_HOST}" rm -f "${tmpc}" >/dev/null 2>&1 || true

|

||||

docker -H "${DIND_HOST}" create --name "${tmpc}" -v "${E2E_TMP_VOL}:/tmp" alpine:3.20 \

|

||||

sh -c 'cd /tmp && tar -czf /tmpdump.tar.gz . || true' >/dev/null

|

||||

docker -H "${DIND_HOST}" start -a "${tmpc}" >/dev/null 2>&1 || true

|

||||

docker -H "${DIND_HOST}" cp "${tmpc}:/tmpdump.tar.gz" "${ARTIFACTS_DIR}/e2e-tmp-${TS}.tar.gz" >/dev/null 2>&1 || true

|

||||

docker -H "${DIND_HOST}" rm -f "${tmpc}" >/dev/null 2>&1 || true

|

||||

|

||||

log "DEBUG: artifacts written:"

|

||||

ls -la "${ARTIFACTS_DIR}" | sed 's/^/ /' || true

|

||||

}

|

||||

|

||||

cleanup() {

|

||||

if [ "${FAILED}" -eq 1 ] && [ "${KEEP_ON_FAIL}" = "1" ]; then

|

||||

log "KEEP_ON_FAIL=1 and failure detected -> skipping cleanup."

|

||||

log "Next steps:"

|

||||

echo " - Inspect DinD logs: docker logs ${DIND} | less"

|

||||

echo " - Use DinD daemon: docker -H ${DIND_HOST} ps -a"

|

||||

echo " - Shared tmp vol: docker -H ${DIND_HOST} run --rm -v ${E2E_TMP_VOL}:/tmp alpine:3.20 ls -la /tmp"

|

||||

echo " - DinD docker root: docker -H ${DIND_HOST} run --rm -v ${DIND_VOL}:/var/lib/docker alpine:3.20 ls -la /var/lib/docker/volumes"

|

||||

return 0

|

||||

fi

|

||||

|

||||

log "Cleanup: stopping ${DIND} and removing network ${NET}"

|

||||

docker rm -f "${DIND}" >/dev/null 2>&1 || true

|

||||

docker network rm "${NET}" >/dev/null 2>&1 || true

|

||||

|

||||

if [ "${KEEP_VOLUMES}" != "1" ]; then

|

||||

docker volume rm -f "${DIND_VOL}" >/dev/null 2>&1 || true

|

||||

docker volume rm -f "${E2E_TMP_VOL}" >/dev/null 2>&1 || true

|

||||

else

|

||||

log "Keeping volumes (E2E_KEEP_VOLUMES=1): ${DIND_VOL}, ${E2E_TMP_VOL}"

|

||||

fi

|

||||

}

|

||||

trap cleanup EXIT INT TERM

|

||||

|

||||

log "Creating network ${NET} (if missing)"

|

||||

docker network inspect "${NET}" >/dev/null 2>&1 || docker network create "${NET}" >/dev/null

|

||||

|

||||

log "Removing old ${DIND} (if any)"

|

||||

docker rm -f "${DIND}" >/dev/null 2>&1 || true

|

||||

|

||||

log "(Re)creating DinD data volume ${DIND_VOL}"

|

||||

docker volume rm -f "${DIND_VOL}" >/dev/null 2>&1 || true

|

||||

docker volume create "${DIND_VOL}" >/dev/null

|

||||

|

||||

log "(Re)creating shared /tmp volume ${E2E_TMP_VOL}"

|

||||

docker volume rm -f "${E2E_TMP_VOL}" >/dev/null 2>&1 || true

|

||||

docker volume create "${E2E_TMP_VOL}" >/dev/null

|

||||

|

||||

log "Starting Docker-in-Docker daemon ${DIND}"

|

||||

docker run -d --privileged \

|

||||

--name "${DIND}" \

|

||||

--network "${NET}" \

|

||||

-e DOCKER_TLS_CERTDIR="" \

|

||||

-v "${DIND_VOL}:/var/lib/docker" \

|

||||

-v "${E2E_TMP_VOL}:/tmp" \

|

||||

-p 2375:2375 \

|

||||

docker:dind \

|

||||

--host=tcp://0.0.0.0:2375 \

|

||||

--tls=false >/dev/null

|

||||

|

||||

log "Waiting for DinD to be ready..."

|

||||

for i in $(seq 1 "${READY_TIMEOUT_SECONDS}"); do

|

||||

if docker -H "${DIND_HOST}" version >/dev/null 2>&1; then

|

||||

log "DinD is ready."

|

||||

break

|

||||

fi

|

||||

sleep 1

|

||||

if [ "${i}" -eq "${READY_TIMEOUT_SECONDS}" ]; then

|

||||

echo "ERROR: DinD did not become ready in time"

|

||||

docker logs --tail=200 "${DIND}" || true

|

||||

FAILED=1

|

||||

dump_debug || true

|

||||

exit 1

|

||||

fi

|

||||

done

|

||||

|

||||

log "Pre-pulling helper images in DinD..."

|

||||

log " - Pulling: ${RSYNC_IMG}"

|

||||

docker -H "${DIND_HOST}" pull "${RSYNC_IMG}"

|

||||

|

||||

log "Ensuring alpine exists in DinD (for debug helpers)"

|

||||

docker -H "${DIND_HOST}" pull alpine:3.20 >/dev/null

|

||||

|

||||

log "Loading ${IMG} image into DinD..."

|

||||

docker save "${IMG}" | docker -H "${DIND_HOST}" load >/dev/null

|

||||

|

||||

log "Running E2E tests inside DinD"
set +e
if [ "${DEBUG_SHELL}" = "1" ]; then
  log "E2E_DEBUG_SHELL=1 -> opening shell in test container"
  docker run --rm -it \
    --network "${NET}" \
    -e DOCKER_HOST="${DIND_HOST_IN_NET}" \
    -e E2E_RSYNC_IMAGE="${RSYNC_IMG}" \
    -v "${DIND_VOL}:/var/lib/docker:ro" \
    -v "${E2E_TMP_VOL}:/tmp" \
    "${IMG}" \
    bash -lc '
      set -e
      if [ ! -f /etc/machine-id ]; then
        mkdir -p /etc
        cat /proc/sys/kernel/random/uuid > /etc/machine-id
      fi
      echo ">> DOCKER_HOST=${DOCKER_HOST}"
      docker ps -a || true
      exec bash
    '
  rc=$?
else
  docker run --rm \
    --network "${NET}" \
    -e DOCKER_HOST="${DIND_HOST_IN_NET}" \
    -e E2E_RSYNC_IMAGE="${RSYNC_IMG}" \
    -v "${DIND_VOL}:/var/lib/docker:ro" \
    -v "${E2E_TMP_VOL}:/tmp" \
    "${IMG}" \
    bash -lc '
      set -euo pipefail
      set -x
      export PYTHONUNBUFFERED=1

      export TMPDIR=/tmp TMP=/tmp TEMP=/tmp

      if [ ! -f /etc/machine-id ]; then
        mkdir -p /etc
        cat /proc/sys/kernel/random/uuid > /etc/machine-id
      fi

      python -m unittest discover -t . -s tests/e2e -p "test_*.py" -v -f
    '
  rc=$?
fi
set -e

if [ "${rc}" -ne 0 ]; then
  FAILED=1
  echo "ERROR: E2E tests failed (exit code: ${rc})"
  dump_debug || true
  exit "${rc}"
fi

log "E2E tests passed."
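The script above waits for the DinD daemon by polling once per second until a timeout expires. A minimal Python sketch of the same pattern follows. The name `wait_until_ready` and the injectable `probe` and `sleep` callables are assumptions made for illustration and are not part of the repository.

```python
import time
from typing import Callable


def wait_until_ready(
    probe: Callable[[], bool],
    timeout_seconds: int,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call probe() up to timeout_seconds times, pausing one second between tries.

    Returns True as soon as the probe succeeds, False if every attempt failed.
    """
    for _ in range(timeout_seconds):
        if probe():
            return True
        sleep(1)
    return False
```

In a real harness the probe might wrap something like `docker -H <host> version` and check the exit status. Passing `sleep` as a parameter keeps the loop testable without real delays.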

@@ -1 +0,0 @@

"""Baudolo backup package."""

@@ -1,9 +0,0 @@

#!/usr/bin/env python3

from __future__ import annotations

from .app import main


if __name__ == "__main__":
    raise SystemExit(main())

@@ -1,246 +0,0 @@

from __future__ import annotations

import os
import pathlib
import sys
from datetime import datetime

import pandas
from dirval import create_stamp_file
from pandas.errors import EmptyDataError

from .cli import parse_args
from .compose import handle_docker_compose_services
from .db import backup_database
from .docker import (
    change_containers_status,
    containers_using_volume,
    docker_volume_names,
    get_image_info,
    has_image,
)
from .shell import execute_shell_command
from .volume import backup_volume


def get_machine_id() -> str:
    return execute_shell_command("sha256sum /etc/machine-id")[0][0:64]


def stamp_directory(version_dir: str) -> None:
    """
    Use dirval as a Python library to stamp the directory (no CLI dependency).
    """
    create_stamp_file(version_dir)


def create_version_directory(versions_dir: str, backup_time: str) -> str:
    version_dir = os.path.join(versions_dir, backup_time)
    pathlib.Path(version_dir).mkdir(parents=True, exist_ok=True)
    return version_dir


def create_volume_directory(version_dir: str, volume_name: str) -> str:
    path = os.path.join(version_dir, volume_name)
    pathlib.Path(path).mkdir(parents=True, exist_ok=True)
    return path


def is_image_ignored(container: str, images_no_backup_required: list[str]) -> bool:
    if not images_no_backup_required:
        return False
    img = get_image_info(container)
    return any(pat in img for pat in images_no_backup_required)


def volume_is_fully_ignored(
    containers: list[str], images_no_backup_required: list[str]
) -> bool:
    """
    Skip file backup only if all containers linked to the volume are ignored.
    """
    if not containers:
        return False
    return all(is_image_ignored(c, images_no_backup_required) for c in containers)


def requires_stop(containers: list[str], images_no_stop_required: list[str]) -> bool:
    """
    Stop is required if ANY container image is NOT in the whitelist patterns.
    """
    for c in containers:
        img = get_image_info(c)
        if not any(pat in img for pat in images_no_stop_required):
            return True
    return False
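The three helpers above rely on plain substring matching against the image name, combined with `any` and `all`. The sketch below restates that logic on image strings directly, so it runs without Docker. The function names and sample image names are hypothetical and only illustrate the semantics.

```python
def image_matches(image: str, patterns: list[str]) -> bool:
    """True if any pattern occurs as a substring of the image name."""
    return any(pat in image for pat in patterns)


def fully_ignored(images: list[str], ignored_patterns: list[str]) -> bool:
    """A volume is skipped only when it has users and every one of them is ignored."""
    if not images:
        return False
    return all(image_matches(img, ignored_patterns) for img in images)


def needs_stop(images: list[str], no_stop_patterns: list[str]) -> bool:
    """A stop is needed as soon as one image is not covered by the whitelist."""
    return any(not image_matches(img, no_stop_patterns) for img in images)
```

Because the match is a substring test, a pattern such as `redis` also matches an image named `redis-exporter`. A volume with no attached containers is never treated as ignored and never requires a stop.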

def backup_mariadb_or_postgres(
    *,
    container: str,
    volume_dir: str,
    databases_df: "pandas.DataFrame",
    database_containers: list[str],
) -> tuple[bool, bool]:
    """
    Returns (is_db_container, dumped_any)
    """
    for img in ["mariadb", "postgres"]:
        if has_image(container, img):
            dumped = backup_database(
                container=container,
                volume_dir=volume_dir,
                db_type=img,
                databases_df=databases_df,
                database_containers=database_containers,
            )
            return True, dumped
    return False, False


def _empty_databases_df() -> "pandas.DataFrame":
    """
    Create an empty DataFrame with the expected schema for databases.csv.

    This allows the backup to continue without DB dumps when the CSV is missing
    or empty (pandas EmptyDataError).
    """
    return pandas.DataFrame(columns=["instance", "database", "username", "password"])


def _load_databases_df(csv_path: str) -> "pandas.DataFrame":
    """
    Load databases.csv robustly.

    - Missing file -> warn, continue with empty df
    - Empty file -> warn, continue with empty df
    - Valid CSV -> return dataframe
    """
    try:
        return pandas.read_csv(csv_path, sep=";", keep_default_na=False, dtype=str)
    except FileNotFoundError:
        print(
            f"WARNING: databases.csv not found: {csv_path}. Continuing without database dumps.",
            file=sys.stderr,
            flush=True,
        )
        return _empty_databases_df()
    except EmptyDataError:
        print(
            f"WARNING: databases.csv exists but is empty: {csv_path}. Continuing without database dumps.",
            file=sys.stderr,
            flush=True,
        )
        return _empty_databases_df()


def _backup_dumps_for_volume(
    *,
    containers: list[str],
    vol_dir: str,
    databases_df: "pandas.DataFrame",
    database_containers: list[str],
) -> tuple[bool, bool]:
    """
    Returns (found_db_container, dumped_any)
    """
    found_db = False
    dumped_any = False

    for c in containers:
        is_db, dumped = backup_mariadb_or_postgres(
            container=c,
            volume_dir=vol_dir,
            databases_df=databases_df,
            database_containers=database_containers,
        )
        if is_db:
            found_db = True
        if dumped:
            dumped_any = True

    return found_db, dumped_any


def main() -> int:
    args = parse_args()

    machine_id = get_machine_id()
    backup_time = datetime.now().strftime("%Y%m%d%H%M%S")

    versions_dir = os.path.join(args.backups_dir, machine_id, args.repo_name)
    version_dir = create_version_directory(versions_dir, backup_time)

    # IMPORTANT:
    # - keep_default_na=False prevents empty fields from turning into NaN
    # - dtype=str keeps all columns stable for comparisons/validation
    #
    # Robust behavior:
    # - if the file is missing or empty, we continue without DB dumps.
    databases_df = _load_databases_df(args.databases_csv)

    print("💾 Start volume backups...", flush=True)

    for volume_name in docker_volume_names():
        print(f"Start backup routine for volume: {volume_name}", flush=True)
        containers = containers_using_volume(volume_name)

        # EARLY SKIP: if all linked containers are ignored, do not create any dirs
        if volume_is_fully_ignored(containers, args.images_no_backup_required):
            print(
                f"Skipping volume '{volume_name}' entirely (all linked containers are ignored).",
                flush=True,
            )
            continue

        vol_dir = create_volume_directory(version_dir, volume_name)

        found_db, dumped_any = _backup_dumps_for_volume(
            containers=containers,
            vol_dir=vol_dir,
            databases_df=databases_df,
            database_containers=args.database_containers,
        )

        # dump-only-sql logic:
        if args.dump_only_sql:
            if found_db:
                if not dumped_any:
                    print(
                        f"WARNING: dump-only-sql requested but no DB dump was produced for DB volume '{volume_name}'. "
                        "Falling back to file backup.",
                        flush=True,
                    )
                    # fall through to file backup below
                else:
                    # DB volume successfully dumped -> skip file backup
                    continue
            # Non-DB volume -> always do file backup (fall through)

        if args.everything:
            # "everything": always do pre-rsync, then stop + rsync again
            backup_volume(versions_dir, volume_name, vol_dir)
            change_containers_status(containers, "stop")
            backup_volume(versions_dir, volume_name, vol_dir)
            if not args.shutdown:
                change_containers_status(containers, "start")
            continue

        # default: rsync, and if needed stop + rsync
        backup_volume(versions_dir, volume_name, vol_dir)
        if requires_stop(containers, args.images_no_stop_required):
            change_containers_status(containers, "stop")
            backup_volume(versions_dir, volume_name, vol_dir)
            if not args.shutdown:
                change_containers_status(containers, "start")

    # Stamp the backup version directory using dirval (python lib)
    stamp_directory(version_dir)
    print("Finished volume backups.", flush=True)

    print("Handling Docker Compose services...", flush=True)
    handle_docker_compose_services(
        args.compose_dir, args.docker_compose_hard_restart_required
    )

    return 0
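The `--dump-only-sql` branch in `main()` reduces to a three-input decision. The sketch below isolates it as a pure function. The name `should_run_file_backup` is hypothetical and does not appear in the code above.

```python
def should_run_file_backup(dump_only_sql: bool, found_db: bool, dumped_any: bool) -> bool:
    """Mirror the dump-only-sql branch: skip the file backup only when the flag
    is set, the volume belongs to a DB container, and a dump was produced."""
    if dump_only_sql and found_db and dumped_any:
        return False
    return True
```

Every other combination falls through to the file backup, including the case where a DB container was found but no dump could be produced.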

@@ -1,82 +0,0 @@

from __future__ import annotations

import argparse
import os


def parse_args() -> argparse.Namespace:
    dirname = os.path.dirname(__file__)
    default_databases_csv = os.path.join(dirname, "databases.csv")

    p = argparse.ArgumentParser(description="Backup Docker volumes.")

    p.add_argument(
        "--compose-dir",
        type=str,
        required=True,
        help="Path to the parent directory containing docker-compose setups",
    )
    p.add_argument(
        "--docker-compose-hard-restart-required",
        nargs="+",
        default=["mailu"],
        help="Compose dir names that require 'docker-compose down && up -d' (default: mailu)",
    )

    p.add_argument(
        "--repo-name",
        default="backup-docker-to-local",
        help="Backup repo folder name under <backups-dir>/<machine-id>/ (default: git repo folder name)",
    )
    p.add_argument(
        "--databases-csv",
        default=default_databases_csv,
        help=f"Path to databases.csv (default: {default_databases_csv})",
    )
    p.add_argument(
        "--backups-dir",
        default="/var/lib/backup/",
        help="Backup root directory (default: /var/lib/backup/)",
    )

    p.add_argument(
        "--database-containers",
        nargs="+",
        required=True,
        help="Container names treated as special instances for database backups",
    )
    p.add_argument(
        "--images-no-stop-required",
        nargs="+",
        required=True,
        help="Image name patterns for which containers should not be stopped during file backup",
    )
    p.add_argument(
        "--images-no-backup-required",
        nargs="+",
        default=[],
        help="Image name patterns for which no backup should be performed",
    )

    p.add_argument(
        "--everything",
        action="store_true",
        help="Force file backup for all volumes and also execute database dumps (like old script)",
    )
    p.add_argument(
        "--shutdown",
        action="store_true",
        help="Do not restart containers after backup",
    )

    p.add_argument(
        "--dump-only-sql",
        action="store_true",
        help=(
            "Create database dumps only for DB volumes. "
            "File backups are skipped for DB volumes if a dump succeeds, "
            "but non-DB volumes are still backed up. "
            "If a DB dump cannot be produced, baudolo falls back to a file backup."
        ),
    )
    return p.parse_args()
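The parser above mixes list-valued options (`nargs="+"`) with boolean switches (`action="store_true"`). The reduced sketch below shows how such arguments arrive in the namespace. It rebuilds only three of the flags, and the sample values (`redis`, `memcached`) are made up for illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Reduced parser with one required list, one optional list, one switch."""
    p = argparse.ArgumentParser(description="Sketch of the flag shapes used above.")
    p.add_argument("--images-no-stop-required", nargs="+", required=True)
    p.add_argument("--images-no-backup-required", nargs="+", default=[])
    p.add_argument("--dump-only-sql", action="store_true")
    return p


# Parse an explicit argument list instead of sys.argv so the sketch is self-contained.
args = build_parser().parse_args(
    ["--images-no-stop-required", "redis", "memcached", "--dump-only-sql"]
)
```

Dashes in option names become underscores on the namespace, so the values are read as `args.images_no_stop_required`, `args.images_no_backup_required`, and `args.dump_only_sql`.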

@@ -1,124 +0,0 @@

from __future__ import annotations

import os
import shutil
import subprocess
from pathlib import Path
from typing import List, Optional


def _detect_env_file(project_dir: Path) -> Optional[Path]:
    """
    Detect Compose env file in a directory.
    Preference (same as Infinito.Nexus wrapper):
    1) <dir>/.env (file)
    2) <dir>/.env/env (file) (legacy layout)
    """
    c1 = project_dir / ".env"
    if c1.is_file():
        return c1

    c2 = project_dir / ".env" / "env"
    if c2.is_file():
        return c2

    return None


def _detect_compose_files(project_dir: Path) -> List[Path]:
    """
    Detect Compose file stack in a directory (same as Infinito.Nexus wrapper).
    Always requires docker-compose.yml.
    Optionals:
    - docker-compose.override.yml
    - docker-compose.ca.override.yml
    """
    base = project_dir / "docker-compose.yml"
    if not base.is_file():
        raise FileNotFoundError(f"Missing docker-compose.yml in: {project_dir}")

    files = [base]

    override = project_dir / "docker-compose.override.yml"
    if override.is_file():
        files.append(override)

    ca_override = project_dir / "docker-compose.ca.override.yml"
    if ca_override.is_file():
        files.append(ca_override)

    return files


def _compose_wrapper_path() -> Optional[str]:
    """
    Prefer the Infinito.Nexus compose wrapper if present.
    Equivalent to: `which compose`
    """
    return shutil.which("compose")


def _build_compose_cmd(project_dir: str, passthrough: List[str]) -> List[str]:
    """
    Build the compose command for this project directory.

    Behavior:
    - If `compose` wrapper exists: use it with --chdir (so it resolves -f/--env-file itself)
    - Else: use `docker compose` and replicate wrapper's file/env detection.
    """
    pdir = Path(project_dir).resolve()

    wrapper = _compose_wrapper_path()
    if wrapper:
        # Wrapper defaults project name to basename of --chdir.
        # "--" ensures wrapper stops parsing its own args.
        return [wrapper, "--chdir", str(pdir), "--", *passthrough]

    # Fallback: pure docker compose, but mirror wrapper behavior.
    files = _detect_compose_files(pdir)
    env_file = _detect_env_file(pdir)

    cmd: List[str] = ["docker", "compose"]
    for f in files:
        cmd += ["-f", str(f)]
    if env_file:
        cmd += ["--env-file", str(env_file)]

    cmd += passthrough
    return cmd
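When no `compose` wrapper is on the PATH, `_build_compose_cmd` assembles a `docker compose` invocation from whichever files exist in the project directory. The condensed sketch below covers that fallback path only. The function name `fallback_compose_cmd` is hypothetical, and the wrapper branch is deliberately left out.

```python
from pathlib import Path


def fallback_compose_cmd(project_dir: Path, passthrough: list[str]) -> list[str]:
    """Build a `docker compose` argv from the files present in project_dir."""
    base = project_dir / "docker-compose.yml"
    if not base.is_file():
        raise FileNotFoundError(f"Missing docker-compose.yml in: {project_dir}")

    cmd = ["docker", "compose", "-f", str(base)]

    # Optional override files are appended in a fixed order when they exist.
    for name in ("docker-compose.override.yml", "docker-compose.ca.override.yml"):
        candidate = project_dir / name
        if candidate.is_file():
            cmd += ["-f", str(candidate)]

    # First matching env file wins: <dir>/.env, then the legacy <dir>/.env/env.
    for env_file in (project_dir / ".env", project_dir / ".env" / "env"):
        if env_file.is_file():
            cmd += ["--env-file", str(env_file)]
            break

    return cmd + passthrough
```

For a directory that holds only `docker-compose.yml` and `.env`, calling it with `["up", "-d"]` yields `docker compose -f <dir>/docker-compose.yml --env-file <dir>/.env up -d` as a list of arguments.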

def hard_restart_docker_services(dir_path: str) -> None:
    print(f"Hard restart compose services in: {dir_path}", flush=True)

    down_cmd = _build_compose_cmd(dir_path, ["down"])
    up_cmd = _build_compose_cmd(dir_path, ["up", "-d"])

    print(">>> " + " ".join(down_cmd), flush=True)
    subprocess.run(down_cmd, check=True)

    print(">>> " + " ".join(up_cmd), flush=True)
    subprocess.run(up_cmd, check=True)


def handle_docker_compose_services(
    parent_directory: str, hard_restart_required: list[str]
) -> None:
    for entry in os.scandir(parent_directory):
        if not entry.is_dir():
            continue

        dir_path = entry.path
        name = os.path.basename(dir_path)
        compose_file = os.path.join(dir_path, "docker-compose.yml")

        print(f"Checking directory: {dir_path}", flush=True)
        if not os.path.isfile(compose_file):
            print("No docker-compose.yml found. Skipping.", flush=True)
            continue

        if name in hard_restart_required:
            print(f"{name}: hard restart required.", flush=True)
            hard_restart_docker_services(dir_path)
        else:
            print(f"{name}: no restart required.", flush=True)

@@ -1,144 +0,0 @@

from __future__ import annotations

import os
import pathlib
import re
import logging
from typing import Optional

import pandas

from .shell import BackupException, execute_shell_command

log = logging.getLogger(__name__)


def get_instance(container: str, database_containers: list[str]) -> str:
    """
    Derive a stable instance name from the container name.
    """
    if container in database_containers:
        return container
    return re.split(r"(_|-)(database|db|postgres)", container)[0]
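`get_instance` derives the instance name by splitting the container name at the first `_` or `-` that is followed by `database`, `db`, or `postgres`, and keeping the prefix. The sketch below applies the same regular expression to a few made-up container names. The helper name `instance_from_container` is hypothetical, and the `database_containers` shortcut is omitted.

```python
import re


def instance_from_container(container: str) -> str:
    """Keep everything before the first '_db', '-db', '_database', '-postgres', etc."""
    return re.split(r"(_|-)(database|db|postgres)", container)[0]


# Hypothetical container names, mapped to the instance each one resolves to.
examples = {
    name: instance_from_container(name)
    for name in ("nextcloud-db", "gitea_database", "matomo-postgres-1", "mailu", "my-dbtool")
}
```

Names without a matching suffix are returned unchanged. The pattern has no trailing boundary, so a name such as `my-dbtool` is also cut at `-db` and resolves to `my`.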

def _validate_database_value(value: Optional[str], *, instance: str) -> str:
    """
    Enforce explicit database semantics:

    - "*" => dump ALL databases (cluster dump for Postgres)
    - "<name>" => dump exactly this database
    - "" => invalid configuration (would previously result in NaN / nan.backup.sql)
    """
    v = (value or "").strip()
    if v == "":
        raise ValueError(
            f"Invalid databases.csv entry for instance '{instance}': "
            "column 'database' must be '*' or a concrete database name (not empty)."
        )
    return v


def _atomic_write_cmd(cmd: str, out_file: str) -> None:
    """
    Write dump output atomically:
    - write to <file>.tmp
    - rename to <file> only on success

    This prevents empty or partial dump files from being treated as valid backups.
    """
    tmp = f"{out_file}.tmp"
    execute_shell_command(f"{cmd} > {tmp}")
    execute_shell_command(f"mv {tmp} {out_file}")
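`_atomic_write_cmd` follows the write-to-temp-then-rename pattern through shell commands. The sketch below shows the same idea with `subprocess` and `os.replace`, which is a variation and not the repository's implementation: it takes an argument list instead of a shell string, and it also deletes the temporary file on failure. The name `atomic_write_cmd` is hypothetical.

```python
import os
import subprocess
import sys
from pathlib import Path


def atomic_write_cmd(argv: list[str], out_file: Path) -> None:
    """Run argv, capture stdout in <out_file>.tmp, and rename only on success."""
    tmp = out_file.with_name(out_file.name + ".tmp")
    try:
        with open(tmp, "wb") as fh:
            # check=True raises CalledProcessError on a non-zero exit status.
            subprocess.run(argv, stdout=fh, check=True)
        # os.replace overwrites the target atomically on the same filesystem.
        os.replace(tmp, out_file)
    except BaseException:
        # Design choice of this sketch: never leave a partial .tmp file behind.
        tmp.unlink(missing_ok=True)
        raise
```

A call such as `atomic_write_cmd([sys.executable, "-c", "print('ok')"], Path("dump.sql"))` leaves `dump.sql` in place only if the command exits with status 0. Passing an argument list also avoids shell quoting problems with paths that contain spaces.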

def fallback_pg_dumpall(
    container: str, username: str, password: str, out_file: str
) -> None:
    """
    Perform a full Postgres cluster dump using pg_dumpall.
    """
    cmd = (
        f"PGPASSWORD={password} docker exec -i {container} "
        f"pg_dumpall -U {username} -h localhost"
    )
    _atomic_write_cmd(cmd, out_file)


def backup_database(
    *,
    container: str,
    volume_dir: str,
    db_type: str,
    databases_df: "pandas.DataFrame",
    database_containers: list[str],
) -> bool:
    """
    Backup databases for a given DB container.

    Returns True if at least one dump was produced.
    """
    instance_name = get_instance(container, database_containers)

    entries = databases_df[databases_df["instance"] == instance_name]
    if entries.empty:
        log.debug("No database entries for instance '%s'", instance_name)
        return False

    out_dir = os.path.join(volume_dir, "sql")
    pathlib.Path(out_dir).mkdir(parents=True, exist_ok=True)

    produced = False

    for row in entries.itertuples(index=False):
        raw_db = getattr(row, "database", "")
        user = (getattr(row, "username", "") or "").strip()
        password = (getattr(row, "password", "") or "").strip()

        db_value = _validate_database_value(raw_db, instance=instance_name)

        # Explicit: dump ALL databases
        if db_value == "*":
            if db_type != "postgres":
                raise ValueError(
                    f"databases.csv entry for instance '{instance_name}': "
                    "'*' is currently only supported for Postgres."
                )

            cluster_file = os.path.join(out_dir, f"{instance_name}.cluster.backup.sql")
            fallback_pg_dumpall(container, user, password, cluster_file)
            produced = True
            continue

        # Concrete database dump
        db_name = db_value
        dump_file = os.path.join(out_dir, f"{db_name}.backup.sql")

        if db_type == "mariadb":
            cmd = (
                f"docker exec {container} /usr/bin/mariadb-dump "
                f"-u {user} -p{password} {db_name}"
            )
            _atomic_write_cmd(cmd, dump_file)
            produced = True
            continue

        if db_type == "postgres":
            try:
                cmd = (
                    f"PGPASSWORD={password} docker exec -i {container} "
                    f"pg_dump -U {user} -d {db_name} -h localhost"
                )
                _atomic_write_cmd(cmd, dump_file)
                produced = True
            except BackupException as e:
                # Explicit DB dump failed -> hard error
                raise BackupException(
                    f"Postgres dump failed for instance '{instance_name}', "
                    f"database '{db_name}'. This database was explicitly configured "
                    "and therefore must succeed.\n"
                    f"{e}"
                )
            continue

    return produced

@@ -1,45 +0,0 @@

from __future__ import annotations

from .shell import execute_shell_command


def get_image_info(container: str) -> str:
    return execute_shell_command(
        f"docker inspect --format '{{{{.Config.Image}}}}' {container}"
    )[0]
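The quadruple braces in `get_image_info` are needed because the command is an f-string: `{{` and `}}` each collapse to a single literal brace, so `{{{{` is required to emit the `{{` of a Go template. The short sketch below builds one command with an f-string and one with a plain string. The container name is hypothetical.

```python
container = "example-container"  # hypothetical container name

# f-string: every doubled brace collapses to one, so four braces yield two.
inspect_cmd = f"docker inspect --format '{{{{.Config.Image}}}}' {container}"

# Plain string (no f prefix): braces are literal and must not be doubled again.
volume_cmd = "docker volume ls --format '{{.Name}}'"
```

Both strings end up containing a Go template delimited by exactly two braces on each side, which is the form `docker --format` expects.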

def has_image(container: str, pattern: str) -> bool:
    """Return True if container's image contains the pattern."""
    return pattern in get_image_info(container)


def docker_volume_names() -> list[str]:
    return execute_shell_command("docker volume ls --format '{{.Name}}'")


def containers_using_volume(volume_name: str) -> list[str]:
    return execute_shell_command(
        f"docker ps --filter volume=\"{volume_name}\" --format '{{{{.Names}}}}'"
    )


def change_containers_status(containers: list[str], status: str) -> None:
    """Stop or start a list of containers."""