Mirror of https://github.com/kevinveenbirkenbach/docker-volume-backup.git (synced 2025-09-10 20:27:13 +02:00)

Compare commits: b83e481d01...main (84 commits)

| SHA1 |
|---|
| a538e537cb |
| 8f72d61300 |
| c754083cec |
| 84d0fd6346 |
| 627187cecb |
| 978e153723 |
| 2bf2b0798e |
| 8196a0206b |
| c4cbb290b3 |
| 2d2376eac8 |
| 8c4ae60a6a |
| 18d6136de0 |
| 3ed89a59a8 |
| 7d3f0a3ae3 |
| 5762754ed7 |
| 556cb17433 |
| 2e2c8131c4 |
| 5005d577cc |
| 327b666237 |
| a7c6fa861a |
| f6c57be1b7 |
| 9d990a728d |
| a355f34e6e |
| f847c8dd74 |
| 3e225b0317 |
| 6537626d77 |
| da7e5cc9be |
| 69a1ea30aa |
| e9588b0e31 |
| 42566815c4 |
| 8bc2b068ff |
| 25d428fc9c |
| 0077efa63c |
| 9d8e80f793 |
| d2b699c271 |
| b7dcb17fd5 |
| 7f6f5f6dc8 |
| 75d48fb3e9 |
| bb3d20c424 |
| f057104a65 |
| 7fe1886ff9 |
| 35e28f31d2 |
| 15a1f17184 |
| ace1a70488 |
| d537393da8 |
| 2b716e5d90 |
| 7702b17a9d |
| 489b5796b7 |
| bf9986f282 |
| e2e62c5835 |
| 4388e09937 |
| 31133f251e |
| 850fc3bf0c |
| 00fd102f81 |
| f369a13d37 |
| f505be35d3 |
| 49c442b299 |
| 0322eee107 |
| 9a5b544e0b |
| 15d7406b7e |
| 9dd58f3ee4 |
| 7f383fcce2 |
| a72753921a |
| 407eddc2c3 |
| 3fedf49f4e |
| fb2e1df233 |
| 47922f53fa |
| 162b3eec06 |
| e0fc263dcb |
| 581ff501fc |
| 540797f244 |
| 7853283ef3 |
| 5e91e298c4 |
| de59646fc0 |
| bcc8a7fb00 |
| 8c4785dfe6 |
| d4799af904 |
| d1f942bc58 |
| 397e242e5b |
| b06317ad48 |
| 79f4cb5e7f |
| 50db914c36 |
| 02062c7d49 |
| a1c33c1747 |

`.github/FUNDING.yml` · vendored · Normal file · 7 changes

```diff
@@ -0,0 +1,7 @@
+github: kevinveenbirkenbach
+
+patreon: kevinveenbirkenbach
+
+buy_me_a_coffee: kevinveenbirkenbach
+
+custom: https://s.veen.world/paypaldonate
```

`.gitignore` · vendored · 1 change

```diff
@@ -1 +1,2 @@
 databases.csv
+__pycache__
```

`Makefile` · Normal file · 4 changes

```diff
@@ -0,0 +1,4 @@
+.PHONY: test
+
+test:
+	python -m unittest discover -s tests/unit -p "test_*.py"
```
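The `test` target runs `python -m unittest discover -s tests/unit -p "test_*.py"`, so it collects any module named `test_*.py` under `tests/unit`. Below is a minimal sketch of such a module. It assumes a hypothetical file `tests/unit/test_instance_name.py`, which is not part of this diff. The sketch copies the container-name rule from `get_instance` (in the script hunk further down) into a local helper rather than importing the script. Importing the new script would read `databases.csv`, run `sha256sum /etc/machine-id` and create the version directory at module level.

```python
import re
import unittest

# Hypothetical local copy of the rule in get_instance(); the list mirrors the
# default DATABASE_CONTAINERS value in the script.
DATABASE_CONTAINERS = ['central-mariadb', 'central-postgres']


def instance_name_for(container):
    """Return the database instance name derived from a container name."""
    if container in DATABASE_CONTAINERS:
        return container
    # Strip a "-db", "_database", "-postgres", ... suffix and everything after it.
    return re.split("(_|-)(database|db|postgres)", container)[0]


class InstanceNameTest(unittest.TestCase):
    def test_special_instance_is_kept_verbatim(self):
        self.assertEqual(instance_name_for("central-postgres"), "central-postgres")

    def test_suffix_is_stripped(self):
        self.assertEqual(instance_name_for("nextcloud-db"), "nextcloud")
        self.assertEqual(instance_name_for("akaunting_database"), "akaunting")

    def test_name_without_separator_is_unchanged(self):
        self.assertEqual(instance_name_for("mariadb"), "mariadb")
```

Discovery does not need an `if __name__ == "__main__"` block, so `make test` picks the class up as is.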

118

README.md

118

README.md

@@ -1,67 +1,101 @@

|

|||||||

# Backup Docker Volumes to Local

|

# Backup Docker Volumes to Local (baudolo) 📦🔄

|

||||||

[](./LICENSE.txt)

|

[](https://github.com/sponsors/kevinveenbirkenbach) [](https://www.patreon.com/c/kevinveenbirkenbach) [](https://buymeacoffee.com/kevinveenbirkenbach) [](https://s.veen.world/paypaldonate)

|

||||||

|

|

||||||

## goal

|

|

||||||

This script backups all docker-volumes with the help of rsync.

|

|

||||||

|

|

||||||

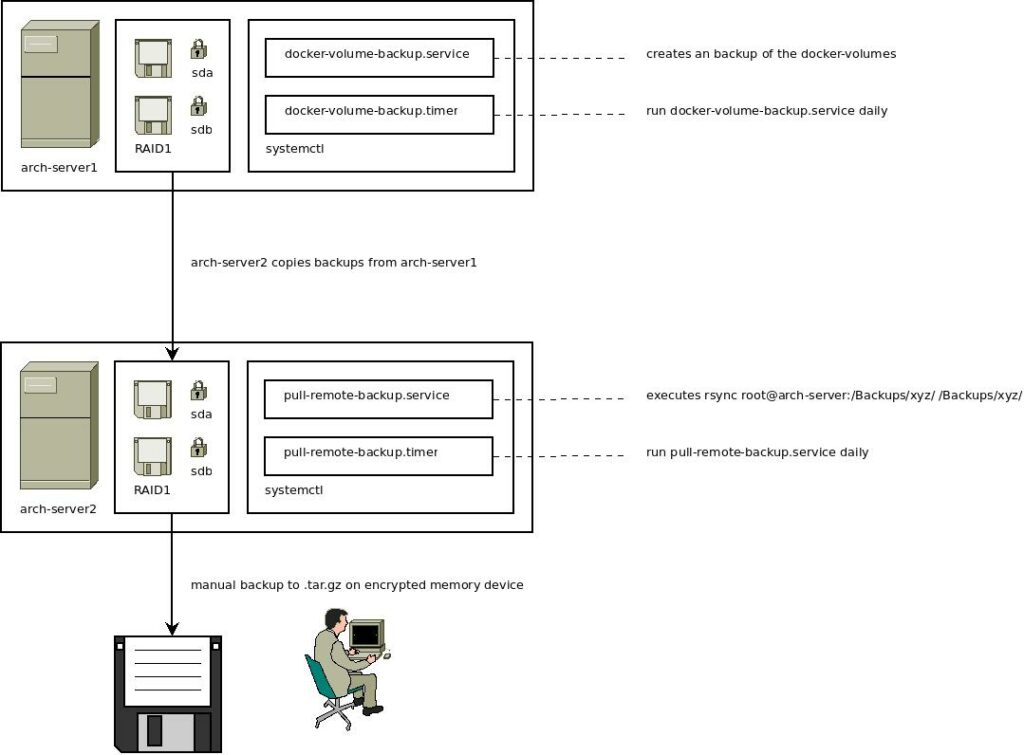

## scheme

|

**Backup Docker Volumes to Local** is a set of Python and shell scripts that enable you to perform incremental backups of all your Docker volumes using rsync. It is designed to integrate seamlessly with [Kevin's Package Manager](https://github.com/kevinveenbirkenbach/package-manager) under the alias **baudolo**, making it easy to install and manage. The tool supports both file and database recoveries with a clear, automated backup scheme.

|

||||||

It is part of the following scheme:

|

|

||||||

|

|

||||||

Further information you will find [in this blog post](https://www.veen.world/2020/12/26/how-i-backup-dedicated-root-servers/).

|

|

||||||

|

|

||||||

## Backup all volumes

|

[](https://www.gnu.org/licenses/agpl-3.0) [](https://www.docker.com) [](https://www.python.org) [](https://github.com/kevinveenbirkenbach/backup-docker-to-local/stargazers)

|

||||||

Execute:

|

|

||||||

|

## 🎯 Goal

|

||||||

|

|

||||||

|

This project automates the backup of Docker volumes using incremental backups (rsync) and supports recovering both files and database dumps (MariaDB/PostgreSQL). A robust directory stamping mechanism ensures data integrity, and the tool also handles restarting Docker Compose services when necessary.

|

||||||

|

|

||||||

|

## 🚀 Features

|

||||||

|

|

||||||

|

- **Incremental Backups:** Uses rsync with `--link-dest` for efficient, versioned backups.

|

||||||

|

- **Database Backup Support:** Backs up MariaDB and PostgreSQL databases from running containers.

|

||||||

|

- **Volume Recovery:** Provides scripts to recover volumes and databases from backups.

|

||||||

|

- **Docker Compose Integration:** Option to automatically restart Docker Compose services after backup.

|

||||||

|

- **Flexible Configuration:** Easily integrated with your Docker environment with minimal setup.

|

||||||

|

- **Comprehensive Logging:** Detailed command output and error handling for safe operations.

|

||||||

|

|

||||||

|

## 🛠 Requirements

|

||||||

|

|

||||||

|

- **Linux Operating System** (with Docker installed) 🐧

|

||||||

|

- **Python 3.x** 🐍

|

||||||

|

- **Docker & Docker Compose** 🔧

|

||||||

|

- **rsync** installed on your system

|

||||||

|

|

||||||

|

## 📥 Installation

|

||||||

|

|

||||||

|

You can install **Backup Docker Volumes to Local** easily via [Kevin's Package Manager](https://github.com/kevinveenbirkenbach/package-manager) using the alias **baudolo**:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pkgmgr install baudolo

|

||||||

|

```

|

||||||

|

|

||||||

|

Alternatively, clone the repository directly:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

git clone https://github.com/kevinveenbirkenbach/backup-docker-to-local.git

|

||||||

|

cd backup-docker-to-local

|

||||||

|

```

|

||||||

|

|

||||||

|

## 🚀 Usage

|

||||||

|

|

||||||

|

### Backup All Volumes

|

||||||

|

|

||||||

|

To backup all Docker volumes, simply run:

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

./backup-docker-to-local.sh

|

./backup-docker-to-local.sh

|

||||||

```

|

```

|

||||||

|

|

||||||

## Recover

|

### Recovery

|

||||||

|

|

||||||

### database

|

#### Recover Volume Files

|

||||||

```bash

|

|

||||||

docker exec -i mysql_container mysql -uroot -psecret database < db.sql

|

|

||||||

```

|

|

||||||

|

|

||||||

### volume

|

|

||||||

Execute:

|

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

|

|

||||||

bash ./recover-docker-from-local.sh "{{volume_name}}" "$(sha256sum /etc/machine-id | head -c 64)" "{{version_to_recover}}"

|

bash ./recover-docker-from-local.sh "{{volume_name}}" "$(sha256sum /etc/machine-id | head -c 64)" "{{version_to_recover}}"

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

### Database

|

#### Recover Database

|

||||||

|

|

||||||

## Debug

|

For example, to recover a MySQL/MariaDB database:

|

||||||

To checkout what's going on in the mount container type in the following command:

|

|

||||||

|

```bash

|

||||||

|

docker exec -i mysql_container mysql -uroot -psecret database < db.sql

|

||||||

|

```

|

||||||

|

|

||||||

|

#### Debug Mode

|

||||||

|

|

||||||

|

To inspect what’s happening inside a container:

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

docker run -it --entrypoint /bin/sh --rm --volumes-from {{container_name}} -v /Backups/:/Backups/ kevinveenbirkenbach/alpine-rsync

|

docker run -it --entrypoint /bin/sh --rm --volumes-from {{container_name}} -v /Backups/:/Backups/ kevinveenbirkenbach/alpine-rsync

|

||||||

```

|

```

|

||||||

|

|

||||||

## Setup

|

## 🔍 Backup Scheme

|

||||||

Install pandas

|

|

||||||

|

|

||||||

## Author

|

The backup mechanism uses incremental backups with rsync and stamps directories with a unique hash. For more details on the backup scheme, check out [this blog post](https://blog.veen.world/blog/2020/12/26/how-i-backup-dedicated-root-servers/).

|

||||||

|

|

||||||

|

|

||||||

Kevin Veen-Birkenbach

|

## 👨💻 Author

|

||||||

- 📧 Email: [kevin@veen.world](mailto:kevin@veen.world)

|

|

||||||

- 🌍 Website: [https://www.veen.world/](https://www.veen.world/)

|

|

||||||

|

|

||||||

## License

|

**Kevin Veen-Birkenbach**

|

||||||

|

- 📧 [kevin@veen.world](mailto:kevin@veen.world)

|

||||||

|

- 🌐 [https://www.veen.world/](https://www.veen.world/)

|

||||||

|

|

||||||

This project is licensed under the GNU Affero General Public License v3.0. The full license text is available in the `LICENSE` file of this repository.

|

## 📜 License

|

||||||

|

|

||||||

## More information

|

This project is licensed under the **GNU Affero General Public License v3.0**. See the [LICENSE](./LICENSE) file for details.

|

||||||

- https://docs.docker.com/storage/volumes/

|

|

||||||

- https://blog.ssdnodes.com/blog/docker-backup-volumes/

|

## 🔗 More Information

|

||||||

- https://www.baculasystems.com/blog/docker-backup-containers/

|

|

||||||

- https://gist.github.com/spalladino/6d981f7b33f6e0afe6bb

|

- [Docker Volumes Documentation](https://docs.docker.com/storage/volumes/)

|

||||||

- https://stackoverflow.com/questions/26331651/how-can-i-backup-a-docker-container-with-its-data-volumes

|

- [Docker Backup Volumes Blog](https://blog.ssdnodes.com/blog/docker-backup-volumes/)

|

||||||

- https://netfuture.ch/2013/08/simple-versioned-timemachine-like-backup-using-rsync/

|

- [Backup Strategies](https://en.wikipedia.org/wiki/Incremental_backup#Incremental)

|

||||||

- https://zwischenzugs.com/2016/08/29/bash-to-python-converter/

|

|

||||||

- https://en.wikipedia.org/wiki/Incremental_backup#Incremental

|

---

|

||||||

- https://unix.stackexchange.com/questions/567837/linux-backup-utility-for-incremental-backups

|

|

||||||

- https://chat.openai.com/share/6d10f143-3f7c-4feb-8ae9-5644c3433a65

|

Happy Backing Up! 🚀🔐

|

||||||

|

|||||||
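Both README versions describe incremental backups with rsync. The new `backup_volume` implements them by calling `rsync -abP --delete --delete-excluded` with `--link-dest` pointing at the previous snapshot's `files/` directory. Files that have not changed are therefore hard-linked into the new snapshot instead of being copied again.

Below is a minimal sketch of the same call, built as an argument list so that no hand-written shell quoting is needed. The names `build_rsync_argv` and `run_rsync` are hypothetical. `run_rsync` treats exit code 24 (source files vanished during the transfer) as non-fatal, as the previous version of the script did.

```python
import subprocess
from typing import List, Optional


def build_rsync_argv(source: str, dest: str, last_backup: Optional[str]) -> List[str]:
    """Build the rsync call for one incremental snapshot of a volume.

    source      -- volume mountpoint; a trailing slash is enforced so rsync
                   copies the directory's contents, not the directory itself
    dest        -- the new snapshot's files/ directory
    last_backup -- previous snapshot's files/ directory, or None on a first run
    """
    argv = ["rsync", "-abP", "--delete", "--delete-excluded"]
    if last_backup:
        # Files unchanged since last_backup become hard links into it.
        argv.append(f"--link-dest={last_backup}")
    argv += [source.rstrip("/") + "/", dest]
    return argv


def run_rsync(argv: List[str]) -> int:
    """Run rsync without a shell; exit code 24 (vanished source files) is tolerated."""
    result = subprocess.run(argv)
    if result.returncode not in (0, 24):
        raise subprocess.CalledProcessError(result.returncode, argv)
    return result.returncode
```

For example, `run_rsync(build_rsync_argv(mountpoint, dest, last))`. In the script itself, the mountpoint comes from `docker volume inspect`, and the previous snapshot comes from `get_last_backup_dir`.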

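The recovery command identifies the machine with `$(sha256sum /etc/machine-id | head -c 64)`, and the script's `get_machine_id()` likewise keeps the first 64 characters of the `sha256sum` output. Those 64 characters are the lowercase hex SHA-256 digest of the file's bytes, so the same identifier can be computed without a subprocess.

The sketch below uses `hashlib` for that. It also joins the identifier into the snapshot root the same way the new script builds `VERSIONS_DIR`. Both function names are hypothetical.

```python
import hashlib
import os


def machine_id_from_file(path: str = "/etc/machine-id") -> str:
    """Hex SHA-256 of the file's bytes; equals the first 64 chars of `sha256sum path`."""
    with open(path, "rb") as fh:
        return hashlib.sha256(fh.read()).hexdigest()


def versions_dir_for(machine_id: str, repository_name: str,
                     backups_dir: str = "/Backups/") -> str:
    """Per-machine, per-repository directory that holds the timestamped snapshots."""
    return os.path.join(backups_dir, machine_id, repository_name)
```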
`Todo.md` · Normal file · 2 changes

```diff
@@ -0,0 +1,2 @@
+# Todo
+- Verify that restore backup is correct implemented
```
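The open item in `Todo.md` is to verify that restores work correctly. The sketch below shows one possible check. It assumes that a restored volume directory should match the `files/` directory of the chosen snapshot byte for byte. It hashes every regular file under both trees and compares the resulting maps. `tree_digest` and `trees_match` are hypothetical helpers, not part of the repository.

```python
import hashlib
import os
from typing import Dict


def tree_digest(root: str) -> Dict[str, str]:
    """Map each regular file's path (relative to root) to its SHA-256 hex digest."""
    digests = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            # This sketch skips symlinks and special files.
            if os.path.islink(full) or not os.path.isfile(full):
                continue
            h = hashlib.sha256()
            with open(full, "rb") as fh:
                for chunk in iter(lambda: fh.read(1 << 20), b""):
                    h.update(chunk)
            digests[os.path.relpath(full, root)] = h.hexdigest()
    return digests


def trees_match(backup_files_dir: str, restored_dir: str) -> bool:
    """True if both trees contain the same regular files with identical contents."""
    return tree_digest(backup_files_dir) == tree_digest(restored_dir)
```

The check compares file contents only. Ownership, permissions, empty directories and symlinks would need separate comparisons.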

`__init__.py` · Normal file · 0 changes (empty file)

File name not preserved · `@@ -1,121 +1,385 @@`

Before:

```python
#!/bin/python
# Backups volumes of running containers
#
import subprocess
import os
import re
import pathlib
import pandas
from datetime import datetime

class RsyncCode24Exception(Exception):
    """Exception for rsync error code 24."""
    """rsync warning: some files vanished before they could be transferred"""
    pass

def bash(command):
    print(command)
    process = subprocess.Popen([command], stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=True)
    out, err = process.communicate()
    stdout = out.splitlines()
    stderr = err.decode("utf-8")
    output = [line.decode("utf-8") for line in stdout]

    exitcode = process.wait()
    if exitcode != 0:
        print(f"Error in command: {command}\nOutput: {out}\nError: {err}\nExit code: {exitcode}")

        if "rsync" in command and exitcode == 24:
            raise RsyncCode24Exception(f"rsync error code 24 encountered: {stderr}")

        raise Exception("Exit code is greater than 0")

    return output

def print_bash(command):
    output = bash(command)
    print(list_to_string(output))
    return output

def list_to_string(list):
    return str(' '.join(list))

print('start backup routine...')

dirname = os.path.dirname(__file__)
repository_name = os.path.basename(dirname)
# identifier of this backups
machine_id = bash("sha256sum /etc/machine-id")[0][0:64]
# Folder in which all Backups are stored
backups_dir = '/Backups/'
# Folder in which the versions off docker volume backups are stored
versions_dir = backups_dir + machine_id + "/" + repository_name + "/"
# Time when the backup started
backup_time = datetime.now().strftime("%Y%m%d%H%M%S")
# Folder containing the current version
version_dir = versions_dir + backup_time + "/"

# Create folder to store version in
pathlib.Path(version_dir).mkdir(parents=True, exist_ok=True)

print('start volume backups...')
print('load connection data...')
databases = pandas.read_csv(dirname + "/databases.csv", sep=";")
volume_names = bash("docker volume ls --format '{{.Name}}'")
for volume_name in volume_names:
    print('start backup routine for volume: ' + volume_name)
    containers = bash("docker ps --filter volume=\"" + volume_name + "\" --format '{{.Names}}'")
    if len(containers) == 0:
        print('skipped due to no running containers using this volume.')
    else:
        container = containers[0]
        # Folder to which the volumes are copied
        volume_destination_dir = version_dir + volume_name
        # Database name
        database_name = re.split("(_|-)(database|db)", container)[0]
        # Entries with database login data concerning this container
        databases_entries = databases.loc[databases['database'] == database_name]
        # Exception for akaunting due to fast implementation
        if len(databases_entries) == 1 and container != 'akaunting':
            print("Backup database...")
            mysqldump_destination_dir = volume_destination_dir + "/sql"
            mysqldump_destination_file = mysqldump_destination_dir + "/backup.sql"
            pathlib.Path(mysqldump_destination_dir).mkdir(parents=True, exist_ok=True)
            database_entry = databases_entries.iloc[0]
            database_backup_command = "docker exec " + container + " /usr/bin/mariadb-dump -u " + database_entry["username"] + " -p" + database_entry["password"] + " " + database_entry["database"] + " > " + mysqldump_destination_file
            print_bash(database_backup_command)
        print("Backup files...")
        files_rsync_destination_path = volume_destination_dir + "/files"
        pathlib.Path(files_rsync_destination_path).mkdir(parents=True, exist_ok=True)
        versions = os.listdir(versions_dir)
        versions.sort(reverse=True)
        if len(versions) > 1:
            last_version = versions[1]
            last_version_files_dir = versions_dir + last_version + "/" + volume_name + "/files"
            if os.path.isdir(last_version_files_dir):
                link_dest_parameter="--link-dest='" + last_version_files_dir + "' "
            else:
                print("No previous version exists in path "+ last_version_files_dir + ".")
                link_dest_parameter=""
        else:
            print("No previous version exists in path "+ last_version_files_dir + ".")
            link_dest_parameter=""
        source_dir = "/var/lib/docker/volumes/" + volume_name + "/_data/"
        rsync_command = "rsync -abP --delete --delete-excluded " + link_dest_parameter + source_dir + " " + files_rsync_destination_path
        try:
            print_bash(rsync_command)
        except RsyncCode24Exception:
            print("Ignoring rsync error code 24, proceeding with the next command.")
        print("stop containers...")
        print("Backup data after container is stopped...")
        print_bash("docker stop " + list_to_string(containers))
        print_bash(rsync_command)
        print("start containers...")
        print_bash("docker start " + list_to_string(containers))
    print("end backup routine for volume:" + volume_name)
print('finished volume backups.')
print('restart docker service...')
print_bash("systemctl restart docker")
print('finished backup routine.')
```

After:

```python
#!/bin/python
# Backups volumes of running containers

import subprocess
import os
import re
import pathlib
import pandas
from datetime import datetime
import argparse

class BackupException(Exception):
    """Generic exception for backup errors."""
    pass

def execute_shell_command(command):
    """Execute a shell command and return its output."""
    print(command)
    process = subprocess.Popen(
        [command],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        shell=True
    )
    out, err = process.communicate()
    if process.returncode != 0:
        raise BackupException(
            f"Error in command: {command}\n"
            f"Output: {out}\nError: {err}\n"
            f"Exit code: {process.returncode}"
        )
    return [line.decode("utf-8") for line in out.splitlines()]

def create_version_directory():
    """Create necessary directories for backup."""
    version_dir = os.path.join(VERSIONS_DIR, BACKUP_TIME)
    pathlib.Path(version_dir).mkdir(parents=True, exist_ok=True)
    return version_dir

def get_machine_id():
    """Get the machine identifier."""
    return execute_shell_command("sha256sum /etc/machine-id")[0][0:64]

### GLOBAL CONFIGURATION ###

# Container names treated as special instances for database backups
DATABASE_CONTAINERS = ['central-mariadb', 'central-postgres']

# Images which do not require container stop for file backups
IMAGES_NO_STOP_REQUIRED = []

# Images to skip entirely
IMAGES_NO_BACKUP_REQUIRED = []

# Compose dirs requiring hard restart
DOCKER_COMPOSE_HARD_RESTART_REQUIRED = ['mailu']

# DEFINE CONSTANTS
DIRNAME = os.path.dirname(__file__)
SCRIPTS_DIRECTORY = pathlib.Path(os.path.realpath(__file__)).parent.parent
DATABASES = pandas.read_csv(os.path.join(DIRNAME, "databases.csv"), sep=";")
REPOSITORY_NAME = os.path.basename(DIRNAME)
MACHINE_ID = get_machine_id()
BACKUPS_DIR = '/Backups/'
VERSIONS_DIR = os.path.join(BACKUPS_DIR, MACHINE_ID, REPOSITORY_NAME)
BACKUP_TIME = datetime.now().strftime("%Y%m%d%H%M%S")
VERSION_DIR = create_version_directory()

def get_instance(container):
    """Extract the database instance name based on container name."""
    if container in DATABASE_CONTAINERS:
        instance_name = container
    else:
        instance_name = re.split("(_|-)(database|db|postgres)", container)[0]
    print(f"Extracted instance name: {instance_name}")
    return instance_name

def stamp_directory():
    """Stamp a directory using directory-validator."""
    stamp_command = (
        f"python {SCRIPTS_DIRECTORY}/directory-validator/"
        f"directory-validator.py --stamp {VERSION_DIR}"
    )
    try:
        execute_shell_command(stamp_command)
        print(f"Successfully stamped directory: {VERSION_DIR}")
    except BackupException as e:
        print(f"Error stamping directory {VERSION_DIR}: {e}")
        exit(1)

def backup_database(container, volume_dir, db_type):
    """Backup database (MariaDB or PostgreSQL) if applicable."""
    print(f"Starting database backup for {container} using {db_type}...")
    instance_name = get_instance(container)
    database_entries = DATABASES.loc[DATABASES['instance'] == instance_name]
    if database_entries.empty:
        raise BackupException(f"No entry found for instance '{instance_name}'")
    for database_entry in database_entries.iloc:
        database_name = database_entry['database']
        database_username = database_entry['username']
        database_password = database_entry['password']
        backup_destination_dir = os.path.join(volume_dir, "sql")
        pathlib.Path(backup_destination_dir).mkdir(parents=True, exist_ok=True)
        backup_destination_file = os.path.join(
            backup_destination_dir,
            f"{database_name}.backup.sql"
        )
        if db_type == 'mariadb':
            cmd = (
                f"docker exec {container} "
                f"/usr/bin/mariadb-dump -u {database_username} "
                f"-p{database_password} {database_name} > {backup_destination_file}"
            )
            execute_shell_command(cmd)
        if db_type == 'postgres':
            cluster_file = os.path.join(
                backup_destination_dir,
                f"{instance_name}.cluster.backup.sql"
            )
            if not database_name:
                fallback_pg_dumpall(
                    container,
                    database_username,
                    database_password,
                    cluster_file
                )
                return
            try:
                if database_password:
                    cmd = (
                        f"PGPASSWORD={database_password} docker exec -i {container} "
                        f"pg_dump -U {database_username} -d {database_name} "
                        f"-h localhost > {backup_destination_file}"
                    )
                else:
                    cmd = (
                        f"docker exec -i {container} pg_dump -U {database_username} "
                        f"-d {database_name} -h localhost --no-password "
                        f"> {backup_destination_file}"
                    )
                execute_shell_command(cmd)
            except BackupException as e:
                print(f"pg_dump failed: {e}")
                print(f"Falling back to pg_dumpall for instance '{instance_name}'")
                fallback_pg_dumpall(
                    container,
                    database_username,
                    database_password,
                    cluster_file
                )
        print(f"Database backup for database {container} completed.")

def get_last_backup_dir(volume_name, current_backup_dir):
    """Get the most recent backup directory for the specified volume."""
    versions = sorted(os.listdir(VERSIONS_DIR), reverse=True)
    for version in versions:
        backup_dir = os.path.join(
            VERSIONS_DIR, version, volume_name, "files", ""
        )
        if backup_dir != current_backup_dir and os.path.isdir(backup_dir):
            return backup_dir
    print(f"No previous backups available for volume: {volume_name}")
    return None

def getStoragePath(volume_name):
    path = execute_shell_command(
        f"docker volume inspect --format '{{{{ .Mountpoint }}}}' {volume_name}"
    )[0]
    return f"{path}/"

def getFileRsyncDestinationPath(volume_dir):
    path = os.path.join(volume_dir, "files")
    return f"{path}/"

def fallback_pg_dumpall(container, username, password, backup_destination_file):
    """Fallback function to run pg_dumpall if pg_dump fails or no DB is defined."""
    print(f"Running pg_dumpall for container '{container}'...")
    cmd = (
        f"PGPASSWORD={password} docker exec -i {container} "
        f"pg_dumpall -U {username} -h localhost > {backup_destination_file}"
    )
    execute_shell_command(cmd)

def backup_volume(volume_name, volume_dir):
    """Perform incremental file backup of a Docker volume."""
    try:
        print(f"Starting backup routine for volume: {volume_name}")
        dest = getFileRsyncDestinationPath(volume_dir)
        pathlib.Path(dest).mkdir(parents=True, exist_ok=True)
        last = get_last_backup_dir(volume_name, dest)
        link_dest = f"--link-dest='{last}'" if last else ""
        source = getStoragePath(volume_name)
        cmd = (
            f"rsync -abP --delete --delete-excluded "
            f"{link_dest} {source} {dest}"
        )
        execute_shell_command(cmd)
    except BackupException as e:
        if "file has vanished" in str(e):
            print("Warning: Some files vanished before transfer. Continuing.")
        else:
            raise
    print(f"Backup routine for volume: {volume_name} completed.")

def get_image_info(container):
    return execute_shell_command(
        f"docker inspect --format '{{{{.Config.Image}}}}' {container}"
    )

def has_image(container, image):
    """Check if the container is using the image"""
    info = get_image_info(container)[0]
    return image in info

def change_containers_status(containers, status):
    """Stop or start a list of containers."""
    if containers:
        names = ' '.join(containers)
        print(f"{status.capitalize()} containers: {names}...")
        execute_shell_command(f"docker {status} {names}")
    else:
        print(f"No containers to {status}.")

def is_image_whitelisted(container, images):
    """
    Return True if the container's image matches any of the whitelist patterns.
    Also prints out the image name and the match result.
    """
    # fetch the image (e.g. "nextcloud:23-fpm-alpine")
    info = get_image_info(container)[0]

    # check against each pattern
    whitelisted = any(pattern in info for pattern in images)

    # log the result
    print(f"Container {container!r} → image {info!r} → whitelisted? {whitelisted}", flush=True)

    return whitelisted

def is_container_stop_required(containers):
    """
    Check if any of the containers are using images that are not whitelisted.
    If so, print them out and return True; otherwise return False.
    """
    # Find all containers whose image isn’t on the whitelist
    not_whitelisted = [
        c for c in containers
        if not is_image_whitelisted(c, IMAGES_NO_STOP_REQUIRED)
    ]

    if not_whitelisted:
        print(f"Containers requiring stop because they are not whitelisted: {', '.join(not_whitelisted)}")
        return True

    return False

def create_volume_directory(volume_name):
    """Create necessary directories for backup."""
    path = os.path.join(VERSION_DIR, volume_name)
    pathlib.Path(path).mkdir(parents=True, exist_ok=True)
    return path

def is_image_ignored(container):
    """Check if the container's image is one of the ignored images."""
    return any(has_image(container, img) for img in IMAGES_NO_BACKUP_REQUIRED)

def backup_with_containers_paused(volume_name, volume_dir, containers, shutdown):
    change_containers_status(containers, 'stop')
    backup_volume(volume_name, volume_dir)
    if not shutdown:
        change_containers_status(containers, 'start')

def backup_mariadb_or_postgres(container, volume_dir):
    """Performs database image specific backup procedures"""
    for img in ['mariadb', 'postgres']:
        if has_image(container, img):
            backup_database(container, volume_dir, img)
            return True
    return False

def default_backup_routine_for_volume(volume_name, containers, shutdown):
    """Perform backup routine for a given volume."""
    vol_dir = ""
    for c in containers:
        if is_image_ignored(c):
            print(f"Ignoring volume '{volume_name}' linked to container '{c}'.")
            continue
        vol_dir = create_volume_directory(volume_name)
        if backup_mariadb_or_postgres(c, vol_dir):
            return
    if vol_dir:
        backup_volume(volume_name, vol_dir)
        if is_container_stop_required(containers):
            backup_with_containers_paused(volume_name, vol_dir, containers, shutdown)

def backup_everything(volume_name, containers, shutdown):
    """Perform file backup routine for a given volume."""
    vol_dir = create_volume_directory(volume_name)
    for c in containers:
        backup_mariadb_or_postgres(c, vol_dir)
    backup_volume(volume_name, vol_dir)
    backup_with_containers_paused(volume_name, vol_dir, containers, shutdown)

def hard_restart_docker_services(dir_path):
    """Perform a hard restart of docker-compose services in the given directory."""
    try:
        print(f"Performing hard restart for docker-compose services in: {dir_path}")
        subprocess.run(["docker-compose", "down"], cwd=dir_path, check=True)
        subprocess.run(["docker-compose", "up", "-d"], cwd=dir_path, check=True)
        print(f"Hard restart completed successfully in: {dir_path}")
    except subprocess.CalledProcessError as e:
        print(f"Error during hard restart in {dir_path}: {e}")
        exit(2)

def handle_docker_compose_services(parent_directory):
    """Iterate through directories and restart or hard restart services as needed."""
    for entry in os.scandir(parent_directory):
        if entry.is_dir():
            dir_path = entry.path
            name = os.path.basename(dir_path)
            print(f"Checking directory: {dir_path}")
            compose_file = os.path.join(dir_path, "docker-compose.yml")
            if os.path.isfile(compose_file):
                print(f"Found docker-compose.yml in {dir_path}.")
                if name in DOCKER_COMPOSE_HARD_RESTART_REQUIRED:
                    print(f"Directory {name} detected. Performing hard restart...")
                    hard_restart_docker_services(dir_path)
                else:
                    print(f"No restart required for services in {dir_path}...")
            else:
                print(f"No docker-compose.yml found in {dir_path}. Skipping.")

def main():
    global DATABASE_CONTAINERS, IMAGES_NO_STOP_REQUIRED
    parser = argparse.ArgumentParser(description='Backup Docker volumes.')
    parser.add_argument('--everything', action='store_true',
                        help='Force file backup for all volumes and additional execute database dumps')
    parser.add_argument('--shutdown', action='store_true',
                        help='Doesn\'t restart containers after backup')
    parser.add_argument('--compose-dir', type=str, required=True,
                        help='Path to the parent directory containing docker-compose setups')
    parser.add_argument(
        '--database-containers',
        nargs='+',
        required=True,
        help='List of container names treated as special instances for database backups'
    )
    parser.add_argument(
        '--images-no-stop-required',
        nargs='+',
        required=True,
        help='List of image names for which containers should not be stopped during file backup'
    )
    parser.add_argument(
        '--images-no-backup-required',
        nargs='+',
        help='List of image names for which no backup should be performed (optional)'
    )
    args = parser.parse_args()
    DATABASE_CONTAINERS = args.database_containers
    IMAGES_NO_STOP_REQUIRED = args.images_no_stop_required
    if args.images_no_backup_required is not None:
        global IMAGES_NO_BACKUP_REQUIRED
        IMAGES_NO_BACKUP_REQUIRED = args.images_no_backup_required

    print('💾 Start volume backups...', flush=True)
    volume_names = execute_shell_command("docker volume ls --format '{{.Name}}'")
    for volume_name in volume_names:
        print(f'Start backup routine for volume: {volume_name}')
        containers = execute_shell_command(
            f"docker ps --filter volume=\"{volume_name}\" --format '{{{{.Names}}}}'"
        )
        if args.everything:
            backup_everything(volume_name, containers, args.shutdown)
        else:
            default_backup_routine_for_volume(volume_name, containers, args.shutdown)

    stamp_directory()
    print('Finished volume backups.')
```

|

||||||

|

|

||||||

|

print('Handling Docker Compose services...')

|

||||||

|

handle_docker_compose_services(args.compose_dir)

|

||||||

|

|

||||||

|

if __name__ == "__main__":

|

||||||

|

main()

|

||||||

|

|||||||
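The directory scan in `handle_docker_compose_services` mixes two concerns: deciding which compose projects need a hard restart, and actually running `docker-compose`. A minimal sketch of the decision part on its own, assuming the same rule (a direct subdirectory that contains `docker-compose.yml` and whose name is listed in the hard-restart set). The helper `plan_compose_restarts` is hypothetical and not part of the script; `hard_restart_required` stands in for `DOCKER_COMPOSE_HARD_RESTART_REQUIRED`, and the directory names in the demo are placeholders.

```python
import os
import tempfile


def plan_compose_restarts(parent_directory, hard_restart_required):
    """Hypothetical helper: list the directories that would be hard-restarted.

    Mirrors the checks in handle_docker_compose_services (a direct
    subdirectory that contains docker-compose.yml and whose name is listed
    in hard_restart_required), but only plans; it never calls docker-compose.
    """
    planned = []
    for entry in os.scandir(parent_directory):
        if not entry.is_dir():
            continue
        compose_file = os.path.join(entry.path, "docker-compose.yml")
        if os.path.isfile(compose_file) and entry.name in hard_restart_required:
            planned.append(entry.path)
    return sorted(planned)


if __name__ == "__main__":
    # Throwaway layout: two compose projects and one directory without a compose file
    with tempfile.TemporaryDirectory() as root:
        for name, has_compose in [("alpha", True), ("beta", True), ("gamma", False)]:
            os.makedirs(os.path.join(root, name))
            if has_compose:
                open(os.path.join(root, name, "docker-compose.yml"), "w").close()
        planned = plan_compose_restarts(root, {"alpha", "gamma"})
        print([os.path.basename(p) for p in planned])  # prints ['alpha']
```

Keeping the planning step pure makes it testable without a Docker daemon; the caller would then loop over the returned paths and invoke `hard_restart_docker_services` for each.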

50

database_entry_seeder.py

Normal file

@@ -0,0 +1,50 @@

import pandas as pd
import argparse
import os


def check_and_add_entry(file_path, instance, database, username, password):
    # Check if the file exists and is not empty
    if os.path.exists(file_path) and os.path.getsize(file_path) > 0:
        # Read the existing CSV file with header
        df = pd.read_csv(file_path, sep=';')
    else:
        # Create a new DataFrame with columns if file does not exist
        df = pd.DataFrame(columns=['instance', 'database', 'username', 'password'])

    # Check if the entry exists and remove it
    mask = (
        (df['instance'] == instance) &
        ((df['database'] == database) |
         (((df['database'].isna()) | (df['database'] == '')) & (database == ''))) &
        (df['username'] == username)
    )

    if not df[mask].empty:
        print("Replacing existing entry.")
        df = df[~mask]
    else:
        print("Adding new entry.")

    # Create a new DataFrame for the new entry
    new_entry = pd.DataFrame([{'instance': instance, 'database': database, 'username': username, 'password': password}])

    # Add (or replace) the entry using concat
    df = pd.concat([df, new_entry], ignore_index=True)

    # Save the updated CSV file
    df.to_csv(file_path, sep=';', index=False)


def main():
    parser = argparse.ArgumentParser(description="Check and replace (or add) a database entry in a CSV file.")
    parser.add_argument("file_path", help="Path to the CSV file")
    parser.add_argument("instance", help="Database instance")
    parser.add_argument("database", help="Database name")
    parser.add_argument("username", help="Username")
    parser.add_argument("password", nargs='?', default="", help="Password (optional)")

    args = parser.parse_args()

    check_and_add_entry(args.file_path, args.instance, args.database, args.username, args.password)


if __name__ == "__main__":
    main()
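For hosts without pandas, the same replace-or-add behaviour can be approximated with the standard `csv` module. This is a sketch, not the seeder's implementation: it assumes the same `;`-separated layout with the header `instance;database;username;password`, and it treats an empty `database` field as matching an empty argument, mirroring the `isna()`/`''` branch of the mask. The function name `upsert_entry` and the instance names in the demo are hypothetical.

```python
import csv
import os
import tempfile

FIELDS = ["instance", "database", "username", "password"]


def upsert_entry(file_path, instance, database, username, password=""):
    """Replace the row matching (instance, database, username), or append a new one."""
    rows = []
    if os.path.exists(file_path) and os.path.getsize(file_path) > 0:
        with open(file_path, newline="") as f:
            rows = list(csv.DictReader(f, delimiter=";"))

    def matches(row):
        # A missing or empty database field matches an empty database argument
        return (row["instance"] == instance
                and (row["database"] or "") == database
                and row["username"] == username)

    kept = [row for row in rows if not matches(row)]
    print("Replacing existing entry." if len(kept) < len(rows) else "Adding new entry.")
    kept.append({"instance": instance, "database": database,
                 "username": username, "password": password})

    # Rewrite the whole file, header included, like df.to_csv(..., index=False)
    with open(file_path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS, delimiter=";")
        writer.writeheader()
        writer.writerows(kept)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "databases.csv")
        upsert_entry(path, "central-postgres", "app", "app", "first")   # prints "Adding new entry."
        upsert_entry(path, "central-postgres", "app", "app", "second")  # prints "Replacing existing entry."
```

As in the pandas version, a replaced entry moves to the end of the file rather than keeping its original position.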

@@ -8,13 +8,50 @@ fi
 
 volume_name="$1" # Volume-Name
 backup_hash="$2" # Hashed Machine ID
-version="$3" # version to backup
-container="${4:-}" # optional
-mysql_root_password="${5:-}" # optional
-database="${6:-}" # optional
+version="$3" # version to recover
+
+# DATABASE PARAMETERS
+database_type="$4" # Valid values: mariadb, postgres
+database_container="$5" # optional
+database_password="$6" # optional
+database_name="$7" # optional
+database_user="$database_name"
+
+
 backup_folder="Backups/$backup_hash/backup-docker-to-local/$version/$volume_name"
 backup_files="/$backup_folder/files"
-backup_sql="/$backup_folder/sql/backup.sql"
+backup_sql="/$backup_folder/sql/$database_name.backup.sql"
 
+# DATABASE RECOVERY
+
+if [ ! -z "$database_type" ]; then
+  if [ "$database_type" = "postgres" ]; then
+    if [ -n "$database_container" ] && [ -n "$database_password" ] && [ -n "$database_name" ]; then
+      echo "Recover PostgreSQL dump"
+      export PGPASSWORD="$database_password"
+      cat "$backup_sql" | docker exec -i "$database_container" psql -v ON_ERROR_STOP=1 -U "$database_user" -d "$database_name"
+      if [ $? -ne 0 ]; then
+        echo "ERROR: Failed to recover PostgreSQL dump"
+        exit 1
+      fi
+      exit 0
+    fi
+  elif [ "$database_type" = "mariadb" ]; then
+    if [ -n "$database_container" ] && [ -n "$database_password" ] && [ -n "$database_name" ]; then
+      echo "recover mysql dump"
+      cat "$backup_sql" | docker exec -i "$database_container" mariadb -u "$database_user" --password="$database_password" "$database_name"
+      if [ $? -ne 0 ]; then
+        echo "ERROR: Failed to recover mysql dump"
+        exit 1
+      fi
+      exit 0
+    fi
+  fi
+  echo "A database backup exists, but a parameter is missing."
+  exit 1
+fi
+
+# FILE RECOVERY
+
 echo "Inspect volume $volume_name"
 docker volume inspect "$volume_name"
@@ -31,19 +68,6 @@ else
   fi
 fi
 
-if [ -f "$backup_sql" ]; then
-  if [ -n "$container" ] && [ -n "$mysql_root_password" ] && [ -n "$database" ]; then
-    echo "recover mysql dump"
-    cat "$backup_sql" | docker exec -i "$container" mariadb -u root --password="$mysql_root_password" "$database"
-    if [ $? -ne 0 ]; then
-      echo "ERROR: Failed to recover mysql dump"
-      exit 1
-    fi
-    exit 0
-  fi
-  echo "A database backup exists, but a parameter is missing. Files will be recovered instead."
-fi
-
 if [ -d "$backup_files" ]; then
   echo "recover files"
   docker run --rm -v "$volume_name:/recover/" -v "$backup_files:/backup/" "kevinveenbirkenbach/alpine-rsync" sh -c "rsync -avv --delete /backup/ /recover/"
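After this change the SQL dump is no longer a fixed `sql/backup.sql` but is named after the database (`sql/$database_name.backup.sql`). A small Python sketch of the resulting layout, mirroring the shell variables `backup_folder`, `backup_files` and `backup_sql`; the function name `recovery_paths` and the argument values in the demo are placeholders. One deliberate difference: the shell script would build `sql/.backup.sql` when no database name is given, whereas the sketch returns `None`, which is how `restore_backup.py` treats a missing `--db-name`.

```python
import posixpath


def recovery_paths(volume_name, backup_hash, version, database_name=None):
    """Return (backup_files, backup_sql) following the recovery script's layout."""
    backup_folder = posixpath.join(
        "Backups", backup_hash, "backup-docker-to-local", version, volume_name
    )
    # The shell script prefixes a slash, so both paths are absolute
    backup_files = "/" + posixpath.join(backup_folder, "files")
    # The dump is named after the database; without a name there is no dump path
    backup_sql = None
    if database_name:
        backup_sql = "/" + posixpath.join(backup_folder, "sql", f"{database_name}.backup.sql")
    return backup_files, backup_sql


if __name__ == "__main__":
    print(recovery_paths("app_data", "machinehash", "some-version", "app"))
```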

3

requirements.yml

Normal file

@@ -0,0 +1,3 @@

pacman:
  - lsof
  - python-pandas

170

restore_backup.py

Normal file

@@ -0,0 +1,170 @@

#!/usr/bin/env python3
# @todo Not tested yet. Needs to be tested
"""
restore_backup.py

A script to recover Docker volumes and database dumps from local backups.
Supports an --empty flag to clear the database objects before import (drops all tables/functions etc.).
"""
import argparse
import os
import sys
import subprocess


def run_command(cmd, capture_output=False, input=None, **kwargs):
    """Run a subprocess command and exit on failure."""
    try:
        result = subprocess.run(cmd, check=True, capture_output=capture_output, input=input, **kwargs)
        return result
    except subprocess.CalledProcessError as e:
        # Mask credentials so they do not end up in logs
        shown = ['--password=***' if part.startswith('--password=') else
                 'PGPASSWORD=***' if part.startswith('PGPASSWORD=') else part
                 for part in cmd]
        print(f"ERROR: Command '{' '.join(shown)}' failed with exit code {e.returncode}")
        if e.stdout:
            print(e.stdout.decode())
        if e.stderr:
            print(e.stderr.decode())
        sys.exit(1)


def recover_postgres(container, password, db_name, user, backup_sql, empty=False):
    print("Recovering PostgreSQL dump...")
    # PGPASSWORD must be set inside the container: os.environ on the host
    # is not propagated into `docker exec`, so pass it with -e instead.
    psql = [
        'docker', 'exec', '-i', '-e', f'PGPASSWORD={password}', container,
        'psql', '-v', 'ON_ERROR_STOP=1', '-U', user, '-d', db_name
    ]
    if empty:
        print("Dropping existing PostgreSQL objects...")
        # Drop all tables, views, sequences and functions in the public schema.
        # Views need DROP VIEW, and IF EXISTS is required because CASCADE may
        # already have removed an object (e.g. a sequence owned by a table).
        drop_sql = """
DO $$ DECLARE r RECORD;
BEGIN
    FOR r IN (
        SELECT table_name AS name, 'TABLE' AS type FROM information_schema.tables WHERE table_schema='public' AND table_type='BASE TABLE'
        UNION ALL
        SELECT table_name AS name, 'VIEW' AS type FROM information_schema.views WHERE table_schema='public'
        UNION ALL
        SELECT routine_name AS name, 'FUNCTION' AS type FROM information_schema.routines WHERE specific_schema='public' AND routine_type='FUNCTION'
        UNION ALL
        SELECT sequence_name AS name, 'SEQUENCE' AS type FROM information_schema.sequences WHERE sequence_schema='public'
    ) LOOP
        -- Use %s for type to avoid quoting the SQL keyword
        EXECUTE format('DROP %s IF EXISTS public.%I CASCADE', r.type, r.name);
    END LOOP;
END
$$;
"""
        run_command(psql, input=drop_sql.encode())
        print("Existing objects dropped.")
    print("Importing the dump...")
    with open(backup_sql, 'rb') as f:
        run_command(psql, stdin=f)
    print("PostgreSQL recovery complete.")


def recover_mariadb(container, password, db_name, user, backup_sql, empty=False):
    print("Recovering MariaDB dump...")
    # Use the same `mariadb` client for every step (the import already relied on it)
    client = ['mariadb', '-u', user, f"--password={password}"]
    if empty:
        print("Dropping existing MariaDB tables...")
        # Get all base table names (views cannot be dropped with DROP TABLE)
        result = run_command([
            'docker', 'exec', container, *client, '-N', '-e',
            f"SELECT table_name FROM information_schema.tables "
            f"WHERE table_schema = '{db_name}' AND table_type = 'BASE TABLE';"
        ], capture_output=True)
        tables = [t for t in result.stdout.decode().splitlines() if t]
        if tables:
            # FOREIGN_KEY_CHECKS is session-scoped, so disabling it, dropping the
            # tables and re-enabling it must happen in a single client session.
            drops = " ".join(f"DROP TABLE IF EXISTS `{db_name}`.`{tbl}`;" for tbl in tables)
            run_command([
                'docker', 'exec', container, *client, '-e',
                f"SET FOREIGN_KEY_CHECKS=0; {drops} SET FOREIGN_KEY_CHECKS=1;"
            ])
        print("Existing tables dropped.")
    print("Importing the dump...")
    with open(backup_sql, 'rb') as f:
        run_command(['docker', 'exec', '-i', container, *client, db_name], stdin=f)
    print("MariaDB recovery complete.")


def recover_files(volume_name, backup_files):
    print(f"Inspecting volume {volume_name}...")
    inspect = subprocess.run(['docker', 'volume', 'inspect', volume_name], stdout=subprocess.DEVNULL)
    if inspect.returncode != 0:
        print(f"Volume {volume_name} does not exist. Creating...")
        run_command(['docker', 'volume', 'create', volume_name])
    else:
        print(f"Volume {volume_name} already exists.")

    if not os.path.isdir(backup_files):
        print(f"ERROR: Backup files folder '{backup_files}' does not exist.")
        sys.exit(1)

    print("Recovering files...")
    run_command([
        'docker', 'run', '--rm',
        '-v', f"{volume_name}:/recover/",
        '-v', f"{backup_files}:/backup/",
        'kevinveenbirkenbach/alpine-rsync',
        'sh', '-c', 'rsync -avv --delete /backup/ /recover/'
    ])
    print("File recovery complete.")


def main():
    parser = argparse.ArgumentParser(
        description='Recover Docker volumes and database dumps from local backups.'
    )
    parser.add_argument('volume_name', help='Name of the Docker volume')
    parser.add_argument('backup_hash', help='Hashed Machine ID')
    parser.add_argument('version', help='Version to recover')

    parser.add_argument('--db-type', choices=['postgres', 'mariadb'], help='Type of database backup')
    parser.add_argument('--db-container', help='Docker container name for the database')
    parser.add_argument('--db-password', help='Password for the database user')
    parser.add_argument('--db-name', help='Name of the database')
    parser.add_argument('--empty', action='store_true', help='Drop existing database objects before importing')

    args = parser.parse_args()

    volume = args.volume_name
    backup_hash = args.backup_hash
    version = args.version

    backup_folder = os.path.join('Backups', backup_hash, 'backup-docker-to-local', version, volume)
    backup_files = os.path.join(os.sep, backup_folder, 'files')
    backup_sql = None
    if args.db_name:
        backup_sql = os.path.join(os.sep, backup_folder, 'sql', f"{args.db_name}.backup.sql")

    # Database recovery
    if args.db_type:
        if not (args.db_container and args.db_password and args.db_name):
            print("ERROR: A database backup exists, but a parameter is missing.")
            sys.exit(1)
        if not os.path.isfile(backup_sql):
            print(f"ERROR: Database dump '{backup_sql}' does not exist.")
            sys.exit(1)

        # The database user is assumed to share the database name
        user = args.db_name
        if args.db_type == 'postgres':
            recover_postgres(args.db_container, args.db_password, args.db_name, user, backup_sql, empty=args.empty)
        else:
            recover_mariadb(args.db_container, args.db_password, args.db_name, user, backup_sql, empty=args.empty)
        sys.exit(0)

    # File recovery
    recover_files(volume, backup_files)


if __name__ == '__main__':
    main()
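`FOREIGN_KEY_CHECKS` is a session variable in MariaDB/MySQL, so disabling it only helps if the `DROP TABLE` statements run in the same client session. A sketch of how the whole drop sequence can be assembled into one string for a single `mariadb -e` call; the helper names `quote_identifier` and `build_mariadb_drop_sql` are hypothetical, and the doubling of embedded backticks is the standard escaping for backtick-quoted identifiers.

```python
def quote_identifier(name):
    """Quote a MariaDB/MySQL identifier with backticks, doubling embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def build_mariadb_drop_sql(db_name, tables):
    """Build one SQL string that drops all given tables within a single session."""
    if not tables:
        return ""
    drops = " ".join(
        f"DROP TABLE IF EXISTS {quote_identifier(db_name)}.{quote_identifier(t)};"
        for t in tables
    )
    # Disable and re-enable FK checks in the same string so they apply to the drops
    return f"SET FOREIGN_KEY_CHECKS=0; {drops} SET FOREIGN_KEY_CHECKS=1;"


if __name__ == "__main__":
    print(build_mariadb_drop_sql("shop", ["orders", "customers"]))
```

The resulting string would be passed as the argument of `-e` in one `docker exec <container> mariadb ...` invocation.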

96

restore_postgres_databases.py

Normal file

@@ -0,0 +1,96 @@

#!/usr/bin/env python3
"""
Restore multiple PostgreSQL databases from .backup.sql files via a Docker container.

Usage:
  ./restore_postgres_databases.py /path/to/backup_dir container_name
"""
import argparse
import subprocess
import sys
import os
import glob


def run_command(cmd, stdin=None):
    """
    Run a subprocess command and abort immediately on any failure.
    :param cmd: list of command parts
    :param stdin: file-like object to use as stdin
    """
    subprocess.run(cmd, stdin=stdin, check=True)


def main():
    parser = argparse.ArgumentParser(
        description="Restore Postgres databases from backup SQL files via Docker container."
    )
    parser.add_argument(
        "backup_dir",
        help="Path to directory containing .backup.sql files"
    )
    parser.add_argument(
        "container",
        help="Name of the Postgres Docker container"
    )
    args = parser.parse_args()

    backup_dir = args.backup_dir
    container = args.container

    pattern = os.path.join(backup_dir, "*.backup.sql")
    sql_files = sorted(glob.glob(pattern))
    if not sql_files:
        print(f"No .backup.sql files found in {backup_dir}", file=sys.stderr)
        sys.exit(1)

    for sqlfile in sql_files:
        # Extract database name by stripping the full suffix '.backup.sql'
        filename = os.path.basename(sqlfile)
        if not filename.endswith('.backup.sql'):
            continue
        dbname = filename[:-len('.backup.sql')]
        print(f"=== Processing {sqlfile} → database: {dbname} ===")

        # Drop the database, forcing disconnect of sessions if necessary
        # (DROP DATABASE ... WITH (FORCE) requires PostgreSQL 13 or later)
        run_command([
            "docker", "exec", "-i", container,
            "psql", "-U", "postgres", "-c",
            f"DROP DATABASE IF EXISTS \"{dbname}\" WITH (FORCE);"
        ])

        # Create a fresh database
        run_command([
            "docker", "exec", "-i", container,
            "psql", "-U", "postgres", "-c",
            f"CREATE DATABASE \"{dbname}\";"
        ])

        # Ensure the ownership role exists
        print(f"Ensuring role '{dbname}' exists...")
        run_command([
            "docker", "exec", "-i", container,
            "psql", "-U", "postgres", "-c",
            (
                "DO $$BEGIN "
                f"IF NOT EXISTS (SELECT FROM pg_roles WHERE rolname = '{dbname}') THEN "
                f"CREATE ROLE \"{dbname}\"; "
                "END IF; "
                "END$$;"
            )
        ])

        # Restore the dump by streaming the file. ON_ERROR_STOP=1 makes psql exit
        # non-zero on the first error, which run_command turns into an abort.
        print(f"Restoring dump into {dbname} (this may take a while)…")
        with open(sqlfile, 'rb') as infile:
            run_command([
                "docker", "exec", "-i", container,
                "psql", "-v", "ON_ERROR_STOP=1", "-U", "postgres", "-d", dbname
            ], stdin=infile)

        print(f"✔ {dbname} restored.")

    print("All databases have been restored.")


if __name__ == "__main__":
    main()
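The administrative statements in this script interpolate the database name, which is taken from the file name, directly into SQL. A name containing `"` or `'` would break them. A sketch of the quoting PostgreSQL expects (identifiers: wrap in double quotes and double any embedded `"`; string literals: wrap in single quotes and double any embedded `'`, assuming `standard_conforming_strings` is on, the default). The helper names are hypothetical, and the sketch assumes the name does not contain `$$`, which would terminate the dollar-quoted `DO` block.

```python
def quote_ident(name):
    """Quote a PostgreSQL identifier: wrap in double quotes, double embedded quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value):
    """Quote a PostgreSQL string literal (assumes standard_conforming_strings = on)."""
    return "'" + value.replace("'", "''") + "'"


def admin_statements(dbname):
    """Return the drop, create and ensure-role statements for one dump file."""
    ident = quote_ident(dbname)
    return [
        f"DROP DATABASE IF EXISTS {ident} WITH (FORCE);",
        f"CREATE DATABASE {ident};",
        "DO $$BEGIN "
        f"IF NOT EXISTS (SELECT FROM pg_roles WHERE rolname = {quote_literal(dbname)}) THEN "
        f"CREATE ROLE {ident}; "
        "END IF; "
        "END$$;",
    ]


if __name__ == "__main__":
    for statement in admin_statements("nextcloud"):
        print(statement)
```

For ordinary names such as `nextcloud` the output matches what the script already sends; the quoting only changes the result for names containing quote characters.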

0

tests/__init__.py

Normal file

0

tests/unit/__init__.py

Normal file

64

tests/unit/test_backup.py

Normal file

@@ -0,0 +1,64 @@

# tests/unit/test_backup.py

import unittest
from unittest.mock import patch
import importlib.util
import sys
import os
import pathlib

# Prevent actual directory creation in backup script import
dummy_mkdir = lambda self, *args, **kwargs: None
original_mkdir = pathlib.Path.mkdir
pathlib.Path.mkdir = dummy_mkdir

# Create a placeholder databases.csv in the project root, which the module
# import expects; an existing file is left untouched
test_dir = os.path.dirname(__file__)
project_root = os.path.abspath(os.path.join(test_dir, '../../'))
sys.path.insert(0, project_root)
db_csv_path = os.path.join(project_root, 'databases.csv')
if not os.path.exists(db_csv_path):
    with open(db_csv_path, 'w') as f:
        f.write('instance;database;username;password\n')

# Dynamically load the hyphenated script as module 'backup'
script_path = os.path.join(project_root, 'backup-docker-to-local.py')
spec = importlib.util.spec_from_file_location('backup', script_path)
backup = importlib.util.module_from_spec(spec)
sys.modules['backup'] = backup
spec.loader.exec_module(backup)

# Restore original mkdir
pathlib.Path.mkdir = original_mkdir


class TestIsImageWhitelisted(unittest.TestCase):
    @patch('backup.get_image_info')
    def test_returns_true_when_image_matches(self, mock_get_image_info):
        # Simulate a container image containing 'mastodon'
        mock_get_image_info.return_value = ['repo/mastodon:v4']
        images = ['mastodon', 'wordpress']
        self.assertTrue(
            backup.is_image_whitelisted('any_container', images),
            "Should return True when at least one image substring matches"
        )

    @patch('backup.get_image_info')
    def test_returns_false_when_no_image_matches(self, mock_get_image_info):
        # Simulate a container image without matching substrings
        mock_get_image_info.return_value = ['repo/nginx:latest']
        images = ['mastodon', 'wordpress']
        self.assertFalse(
            backup.is_image_whitelisted('any_container', images),
            "Should return False when no image substring matches"